
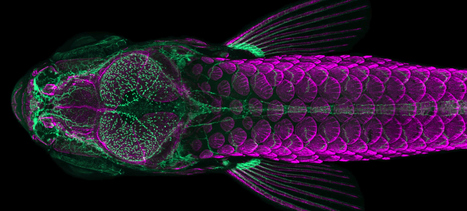

Two recent papers have demonstrated that during a critical early period of brain development, the gut’s microbiome, the assortment of bacteria that grow within it, helps to mold a brain system that’s important for social skills later in life. Scientists found this influence in fish, but molecular and neurological evidence suggests that some form of it could also occur in mammals, including humans. In a recent paper published in PLOS Biology, researchers found that zebrafish that grew up lacking a gut microbiome were far less social than their peers with colonized colons, and the structure of their brains reflected the difference. In a related article in BMC Genomics in late September, they described molecular characteristics of the neurons affected by the gut bacteria. Equivalents of those neurons appear in rodents, and scientists can now look for them in other species, including humans.

The process of reconstructing experiences from human brain activity offers a unique lens into how the brain interprets and represents the world. Recently, the Google team and international collaborators introduced a method for reconstructing music from brain activity alone, captured using functional magnetic resonance imaging (fMRI). This approach uses either music retrieval or the MusicLM music generation model conditioned on embeddings derived from fMRI data. The generated music resembles the musical stimuli that human subjects experienced, with respect to semantic properties like genre, instrumentation, and mood. The scientists investigate the relationship between different components of MusicLM and brain activity through a voxel-wise encoding modeling analysis. Furthermore, they analyze which brain regions represent information derived from purely textual descriptions of music stimuli.
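To make the idea of a voxel-wise encoding analysis more concrete, here is a minimal sketch of the general technique: a regularized linear model is fitted separately for each voxel to predict its response from stimulus features, and each voxel is then scored by how well held-out responses are predicted. This is not the authors' pipeline. The data are synthetic, and the feature dimensions, the ridge penalty `alpha`, and the function names are assumptions chosen for illustration only.

```python
import numpy as np

def fit_voxelwise_ridge(features, responses, alpha=1.0):
    """Closed-form ridge regression, solved for every voxel at once.

    features:  (n_samples, n_features) stimulus embeddings
    responses: (n_samples, n_voxels) measured activity
    Returns a weight matrix of shape (n_features, n_voxels).
    """
    n_features = features.shape[1]
    gram = features.T @ features + alpha * np.eye(n_features)
    return np.linalg.solve(gram, features.T @ responses)

def voxel_scores(features, responses, weights):
    """Pearson correlation between predicted and measured activity, per voxel."""
    pred = features @ weights
    pred_c = pred - pred.mean(axis=0)
    resp_c = responses - responses.mean(axis=0)
    denom = np.linalg.norm(pred_c, axis=0) * np.linalg.norm(resp_c, axis=0)
    return (pred_c * resp_c).sum(axis=0) / denom

# Synthetic stand-in data (assumption): 8 stimulus features, 5 voxels.
rng = np.random.default_rng(0)
n_train, n_test, n_features, n_voxels = 200, 50, 8, 5
true_w = rng.normal(size=(n_features, n_voxels))
true_w[:, -1] = 0.0  # the last voxel carries no stimulus information

X_train = rng.normal(size=(n_train, n_features))
X_test = rng.normal(size=(n_test, n_features))
Y_train = X_train @ true_w + 0.5 * rng.normal(size=(n_train, n_voxels))
Y_test = X_test @ true_w + 0.5 * rng.normal(size=(n_test, n_voxels))

weights = fit_voxelwise_ridge(X_train, Y_train, alpha=1.0)
scores = voxel_scores(X_test, Y_test, weights)
print(np.round(scores, 2))
```

In this toy setup, voxels whose activity depends on the stimulus features get high held-out correlations, while the uninformative voxel scores much lower. Comparing such scores across feature sets is the usual way to ask which brain regions carry which kind of information.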

A new artificial intelligence system called a semantic decoder can translate a person's brain activity -- while listening to a story or silently imagining telling a story -- into a continuous stream of text. The system developed by researchers at The University of Texas at Austin might help people who are mentally conscious yet unable to physically speak, such as those debilitated by strokes, to communicate intelligibly again. The study, published in the journal Nature Neuroscience, was led by Jerry Tang, a doctoral student in computer science, and Alex Huth, an assistant professor of neuroscience and computer science at UT Austin. The work relies in part on a transformer model, similar to the ones that power OpenAI's ChatGPT and Google's Bard. Unlike other language decoding systems in development, this system does not require subjects to have surgical implants, making the process noninvasive. Participants also do not need to use only words from a prescribed list. Brain activity is measured using an fMRI scanner after extensive training of the decoder, in which the individual listens to hours of podcasts in the scanner. Later, provided that the participant is open to having their thoughts decoded, their listening to a new story or imagining telling a story allows the machine to generate corresponding text from brain activity alone. "For a noninvasive method, this is a real leap forward compared to what's been done before, which is typically single words or short sentences," Huth said. "We're getting the model to decode continuous language for extended periods of time with complicated ideas." The result is not a word-for-word transcript. Instead, researchers designed it to capture the gist of what is being said or thought, albeit imperfectly. About half the time, when the decoder has been trained to monitor a participant's brain activity, the machine produces text that closely (and sometimes precisely) matches the intended meanings of the original words.
For example, in experiments, a participant listening to a speaker say, "I don't have my driver's license yet" had their thoughts translated as, "She has not even started to learn to drive yet." Listening to the words, "I didn't know whether to scream, cry or run away. Instead, I said, 'Leave me alone!'" was decoded as, "Started to scream and cry, and then she just said, 'I told you to leave me alone.'" Beginning with an earlier version of the paper that appeared as a preprint online, the researchers addressed questions about potential misuse of the technology. The paper describes how decoding worked only with cooperative participants who had participated willingly in training the decoder. Results for individuals on whom the decoder had not been trained were unintelligible, and if participants on whom the decoder had been trained later put up resistance -- for example, by thinking other thoughts -- results were similarly unusable. "We take very seriously the concerns that it could be used for bad purposes and have worked to avoid that," Tang said. "We want to make sure people only use these types of technologies when they want to and that it helps them." In addition to having participants listen to or think about stories, the researchers asked subjects to watch four short, silent videos while in the scanner. The semantic decoder was able to use their brain activity to accurately describe certain events from the videos. The system currently is not practical for use outside of the laboratory because of its reliance on the time needed on an fMRI machine. But the researchers think this work could transfer to other, more portable brain-imaging systems, such as functional near-infrared spectroscopy (fNIRS). "fNIRS measures where there's more or less blood flow in the brain at different points in time, which, it turns out, is exactly the same kind of signal that fMRI is measuring," Huth said. "So, our exact kind of approach should translate to fNIRS," although, he noted, the resolution with fNIRS would be lower.

Psycholinguist Giosuè Baggio sheds light on the thrilling, evolving field of neurolinguistics, where neuroscience and linguistics meet. What exactly is language? At first thought, it’s a continuous flow of sounds we hear, sounds we make, scribbles on paper or on a screen, movements of our hands, and expressions on our faces. But if we pause for a moment, we find that behind this rich experiential display is something different: the smaller and larger building blocks of a Lego-like game of construction, with parts of words, words, phrases, sentences, and larger structures still. We can choose the pieces and put them together with some freedom, but not anything goes. There are rules, constraints. And no half measures. Either a sound is used in a word, or it’s not; either a word is used in a sentence, or it’s not. But unlike Lego, language is abstract: Eventually, one runs out of Lego bricks, whereas there could be no shortage of the sound b, and no cap on reusing the word “beautiful” in as many utterances as there are beautiful things to talk about.

Language is a calculus

It’s tempting to see languages as mathematical systems of some kind. Indeed, languages are calculi, in a very real sense, as real as the senses in which they are changing historical objects, means of communication, inner voices, vehicles of identity, instruments of persuasion, and mediums of great art. But while all these aspects of language strike us almost immediately, as they have philosophers for centuries, the connection between language and computation is not immediately apparent, nor do all scholars agree that it is even right to make it. It took all the ingenuity of linguists, like Noam Chomsky, and logicians, like Richard Montague, starting in the 1950s, to build mathematical systems that could capture language. Chomsky-style calculi tell us what words can go where in a sentence’s structure (syntax); Montague-style calculi tell us how language expresses relations between sets (semantics).
They also remind us that no language could function without operations that put together words and ideas in the right ways: The sentence “I want that beautiful tree in our garden” is not a random configuration of words; its meaning is not completely open to interpretation. It is the tree, not the garden, that is beautiful; it is the garden, not the tree, that is ours.

Language in the brain

At this point, most linguists would probably be content with saying that calculi are handy constructs, tools we need in order to make rational sense of the jumble that is language. But if pressed, they would admit that the brain has to be doing some of that stuff, too. When you hear, read, or see “I want that beautiful tree in our garden,” something inside your head has to put together those words in the right way, and not, say, in the way that yields the message that I want that tree in our beautiful garden. The language-as-calculus idea may well be the best model of language in the brain we currently have, or perhaps the worst, except for all the others. Linguists, logicians, and philosophers, for at least the first half of the 20th century, resisted the idea that language is in the brain. If it is anywhere at all, they estimated, it is out there, in the community of speakers. For neurologists such as Paul Broca and Carl Wernicke, active in the second half of the 19th century, the answer was different. They had shown that lesions to certain parts of the cerebral cortex could lead to specific disorders of spoken language, known as “aphasias.” It took an entire century, from around 1860 to 1960, for the ideas that language is in the brain and that language is a calculus to meet, for neurology and linguistics to blend into neurolinguistics. If we look at what the brain does while people perform a language task, we find some of the signatures of a computational system at work.
If we record electric or magnetic fields produced by the brain, for example, we find signals that are only sensitive to the identity of the sound one is hearing — say, that it is a b, instead of a d — and not to the pitch, volume, or any other concrete and contingent features of the speech sound. At some level, the brain treats each sound as an abstract variable in a calculus: a b like any other, not this particular b. The brain also reacts differently to grammar errors, as in “I want that beautiful trees in our garden,” and incongruities of meaning, as in “I want that beautiful democracy in our garden”: Rules and constraints matter. We are slowly figuring out how the brain operates with the abstract system that is language, how it arranges morphemes — the smallest grammatical units of meaning — into words, words into phrases, and so on, on the fly. We know that it often looks ahead in time, trying to anticipate what new information might arrive, and that words and ideas are combined by a few different operations, not just one, kicking in at slightly different times and originating in different parts of the brain.

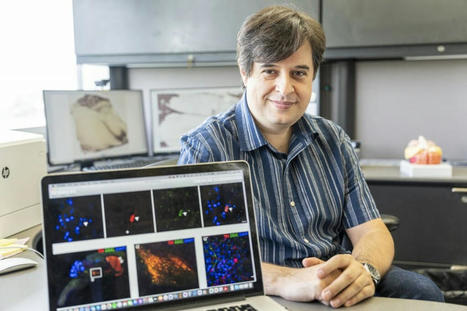

The cellular differences between these species may illuminate steps in their evolution and how those differences can be implicated in disorders, such as autism and intellectual disabilities, seen in humans. While the physical differences between humans and non-human primates are quite distinct, a new study reveals their brains may be remarkably similar. And yet, the smallest changes may make big differences in developmental and psychiatric disorders. Understanding the molecular differences that make the human brain distinct can help researchers study disruptions in its development. A new study, published recently in the journal Science by a team including University of Wisconsin-Madison neuroscience professor Andre Sousa, investigates the differences and similarities of cells in the prefrontal cortex -- the frontmost region of the brain, an area that plays a central role in higher cognitive functions -- between humans and non-human primates such as chimpanzees, Rhesus macaques and marmosets. The cellular differences between these species may illuminate steps in their evolution and how those differences can be implicated in disorders, such as autism and intellectual disabilities, seen in humans. Sousa, who studies the developmental biology of the brain at UW-Madison's Waisman Center, decided to start by studying and categorizing the cells in the prefrontal cortex in partnership with the Yale University lab where he worked as a postdoctoral researcher. "We are profiling the dorsolateral prefrontal cortex because it is particularly interesting. This cortical area only exists in primates. It doesn't exist in other species," Sousa says. "It has been associated with several relevant functions in terms of high cognition, like working memory. It has also been implicated in several neuropsychiatric disorders. So, we decided to do this study to understand what is unique about humans in this brain region." 
Sousa and his lab collected genetic information from more than 600,000 prefrontal cortex cells from tissue samples from humans, chimpanzees, macaques and marmosets. They analyzed that data to categorize the cells into types and determine the differences in similar cells across species. Unsurprisingly, the vast majority of the cells were fairly comparable. "Most of the cells are actually very similar because these species are relatively close evolutionarily," Sousa says. Sousa and his collaborators found five cell types in the prefrontal cortex that were not present in all four of the species. They also found differences in the abundances of certain cell types as well as diversity among similar cell populations across species. When comparing a chimpanzee to a human, the differences seem huge -- from their physical appearances down to the capabilities of their brains. But at the cellular and genetic level, at least in the prefrontal cortex, the similarities are many and the dissimilarities few. "Our lab really wants to know what is unique about the human brain. Obviously from this study and our previous work, most of it is actually the same, at least among primates," Sousa says.

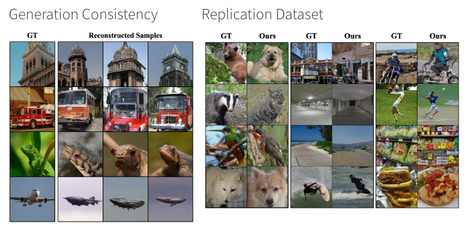

For the first time, we show that non-invasive brain recordings can be used to decode images with similar performance as invasive measures. Decoding visual stimuli from brain recordings aims to deepen our understanding of the human visual system and build a solid foundation for bridging human vision and computer vision through the Brain-Computer Interface. However, due to the scarcity of data annotations and the complexity of underlying brain information, it is challenging to decode images with faithful details and meaningful semantics.

In this work, AI scientists present MinD-Vis: Sparse Masked Brain Modeling with Double-Conditioned Diffusion Model for Vision Decoding. Specifically, by boosting the information capacity of representations learned in a large-scale resting-state fMRI dataset, they were able to show that the MinD-Vis framework reconstructed highly plausible images with semantically matching details from brain recordings with very few training pairs. The benchmarked method outperformed previous state-of-the-art approaches in both semantic mapping (100-way semantic classification) and generation quality (FID), by 66% and 41%, respectively. Exhaustive ablation studies were conducted to analyze this framework.

A human visual decoding system that relies only on limited annotations. State-of-the-art 100-way top-1 classification accuracy on GOD dataset: 23.9%, outperforming the previous best by 66%. State-of-the-art generation quality (FID) on GOD dataset: 1.67, outperforming the previous best by 41%.

What makes the human brain distinct from that of all other animals -- including even our closest primate relatives? In an analysis of cell types in the prefrontal cortex of four primate species, Yale researchers identified species-specific -- particularly human-specific -- features, they report Aug. 25, 2022 in the journal Science. And they found that what makes us human may also make us susceptible to neuropsychiatric diseases. For the study, the researchers looked specifically at the dorsolateral prefrontal cortex (dlPFC), a brain region that is unique to primates and essential for higher-order cognition. Using a single-cell RNA-sequencing technique, they profiled expression levels of genes in hundreds of thousands of cells collected from the dlPFC of adult humans, chimpanzees, macaques, and marmosets. "Today, we view the dorsolateral prefrontal cortex as the core component of human identity, but still we don't know what makes this unique in humans and distinguishes us from other primate species," said Nenad Sestan, the Harvey and Kate Cushing Professor of Neuroscience at Yale, professor of comparative medicine, of genetics, and of psychiatry, and the lead senior author of the paper. "Now we have more clues." To answer this, the researchers first asked whether there are any cell types uniquely present in humans or in the other non-human primate species analyzed. After grouping cells with similar expression profiles, they revealed 109 shared primate cell types but also five that were not common to all species. These included a type of microglia, or brain-specific immune cell, that was present only in humans and a second type shared by only humans and chimpanzees. The human-specific microglia type exists throughout development and adulthood, the researchers found, suggesting the cells play a role in brain upkeep rather than combatting disease.
"We humans live in a very different environment with a unique lifestyle compared to other primate species; and glia cells, including microglia, are very sensitive to these differences," Sestan said. "The type of microglia found in the human brain might represent an immune response to the environment." An analysis of gene expression in the microglia revealed another human-specific surprise -- the presence of the gene FOXP2. This discovery raised great interest because variants of FOXP2 have been linked to verbal dyspraxia, a condition in which patients have difficulty producing language or speech. Other studies have also shown that FOXP2 is associated with other neuropsychiatric diseases, such as autism, schizophrenia, and epilepsy.

Synchron announced today that it completed the first-in-human brain-computer interface (BCI) implant in the U.S. The procedure, performed at Mount Sinai West in New York, represents the first such implant to occur in the U.S. using an endovascular BCI approach, which does not require invasive open-brain surgery. Dr. Shahram Majidi, assistant professor of neurosurgery, neurology and radiology at the Icahn School of Medicine at Mount Sinai, led the procedure, which was performed in the angiography suite with a minimally invasive, endovascular approach. Earlier this year, Synchron’s neuroscience chief explained how this type of catheter delivery could enable better brain implants. It was the first patient implant in Synchron’s Command trial, operating under FDA investigational device exemption to assess a permanently implanted BCI. Command will assess the safety and efficacy of the company’s motor BCI technology, including the Stentrode, in patients with severe paralysis, aiming to enable the patient to control digital devices hands-free. Study outcomes include the use of brain data to control digital devices and achieve improvements in functional independence, according to a news release. “This is an incredibly exciting milestone for the field, because of its implications and huge potential,” Majidi said in the release. “The implantation procedure went extremely well, and the patient was able to go home 48 hours after the surgery.” Notably, the first-in-human implant pulls Synchron ahead of Elon Musk’s Neuralink, which is working to develop an implant placed in the brain through a robot-assisted procedure. Neuralink requires implantation through the skull, and, while Musk and company officials said they planned to file for FDA approval for human trials in 2020, it has yet to receive such approval. 
Earlier this year, Neuralink and the University of California, Davis, were accused of “egregious violations of the Animal Welfare Act” by the Physicians Committee for Responsible Medicine (PCRM), citing documents obtained through a public records lawsuit. The allegations claimed that Neuralink caused extreme suffering in monkeys. Synchron’s Stentrode is implanted within the motor cortex through the jugular vein in a minimally invasive endovascular procedure. Once implanted, it detects and wirelessly transmits motor intent using a proprietary digital language to allow severely paralyzed patients to control personal devices with hands-free point-and-click. The company intends to continue enrollment in the Command trial, while recently reported long-term safety results demonstrated the safe use of the device in four patients out to 12 months in an Australia-based trial. “The first-in-human implant of an endovascular BCI in the U.S. is a major clinical milestone that opens up new possibilities for patients with paralysis,” Synchron CEO and founder Dr. Tom Oxley said. “Our technology is for the millions of people who have lost the ability to use their hands to control digital devices. We’re excited to advance a scalable BCI solution to market, one that has the potential to transform so many lives.”

Chronic cough is globally prevalent across all age groups. This disorder is challenging to treat because many pulmonary and extra-pulmonary conditions can present with chronic cough, and cough can also be present without any identifiable underlying cause or be refractory to therapies that improve associated conditions. Most patients with chronic cough have cough hypersensitivity, which is characterized by increased neural responsiveness to a range of stimuli that affect the airways and lungs, and other tissues innervated by common nerve supplies. Cough hypersensitivity presents as excessive coughing often in response to relatively innocuous stimuli, causing significant psychophysical morbidity and affecting patients’ quality of life. Understanding the mechanism(s) that contribute to cough hypersensitivity and excessive coughing in different patient populations and across the lifespan is advancing and has contributed to the development of new therapies for chronic cough in adults. Owing to differences in the pathology, the organs involved and individual patient factors, treatment of chronic cough is progressing towards a personalized approach, and, in the future, novel ways to endotype patients with cough may prove valuable in management.

For decades, growth charts have been used by pediatricians as reference tools. The charts allow health professionals to plot and measure a child’s height and weight from birth to young adulthood. The percentile scores they provide, especially across multiple visits, help doctors screen for conditions such as obesity or inadequate growth, which fall at the extremes of these scores.

Meanwhile, it is possible to measure brain development with imaging technologies such as ultrasound, magnetic resonance imaging (MRI) and computerized tomography (CT). The development of these technologies has led to a wealth of research on how the brain changes, and each year, millions of clinical brain scans are performed worldwide. Despite this progress, there are few measures that are used to aid in monitoring brain development. But why?

In contrast to traditional growth charts, quantifying brain development and aging comes with a host of technical obstacles. Simply put, there is no tape measure for the brain. This makes it difficult to standardize measures across different studies. The costs and complexity of acquiring brain scans mean the data available to generate reference charts for a single study is limited.

We sought to address this by stitching together data across the largest possible combination of existing studies. We contacted many researchers to see if they would be willing to contribute to these reference charts. As evident from our large dataset, these requests were met with overwhelming enthusiasm. This turned a grassroots project into a collaborative global effort spanning six continents and dozens of institutions, the results of which have just been reported in the journal Nature.

Machine learning and neuroscience discover the mathematical system used by the brain to organize visual objects. When Plato set out to define what made a human a human, he settled on two primary characteristics: We do not have feathers, and we are bipedal (walking upright on two legs). Plato's characterization may not encompass all of what identifies a human, but his reduction of an object to its fundamental characteristics provides an example of a technique known as principal component analysis. Now, Caltech researchers have combined tools from machine learning and neuroscience to discover that the brain uses a mathematical system to organize visual objects according to their principal components. The work shows that the brain contains a two-dimensional map of cells representing different objects. The location of each cell in this map is determined by the principal components (or features) of its preferred objects; for example, cells that respond to round, curvy objects like faces and apples are grouped together, while cells that respond to spiky objects like helicopters or chairs form another group. The research was conducted in the laboratory of Doris Tsao (BS '96), professor of biology, director of the Tianqiao and Chrissy Chen Center for Systems Neuroscience and holder of its leadership chair, and Howard Hughes Medical Institute Investigator. A paper describing the study appears in the journal Nature on June 3, 2022. The researchers took the set of thousands of images they had shown primates and passed them through a deep network. They then examined activations of units found in the eight different layers of the deep network. Because there are thousands of units in each layer, it was difficult to discern any patterns to their firing. The lead researcher, Bao, decided to use principal component analysis to determine the fundamental parameters driving activity changes in each layer of the network. 
In one of the layers, Bao noticed something oddly familiar: one of the principal components was strongly activated by spiky objects, such as spiders and helicopters, and was suppressed by faces. This precisely matched the object preferences of the cells Bao had recorded from earlier in the no man's land network. What could account for this coincidence? One idea was that IT cortex might actually be organized as a map of object space, with x- and y-dimensions determined by the top two principal components computed from the deep network. This idea would predict the existence of face, body, and no man's land regions, since their preferred objects each fall neatly into different quadrants of the object space computed from the deep network. But one quadrant had no known counterpart in the brain: stubby objects, like radios or cups. Bao decided to show primates images of objects belonging to this "missing" quadrant as he monitored the activity of their IT cortexes. Astonishingly, he found a network of cortical regions that did respond only to stubby objects, as predicted by the model. This means the deep network had successfully predicted the existence of a previously unknown set of brain regions. Why was each quadrant represented by a network of multiple regions? Earlier, Tsao's lab had found that different face patches throughout IT cortex encode an increasingly abstract representation of faces. Bao found that the two networks he had discovered showed this same property: cells in more anterior regions of the brain responded to objects across different angles, while cells in more posterior regions responded to objects only at specific angles. This shows that the temporal lobe contains multiple copies of the map of object space, each more abstract than the preceding. Finally, the team was curious how complete the map was. 
They measured the brain activity from each of the four networks comprising the map as the primates viewed images of objects and then decoded the brain signals to determine what the primates had been looking at. The model was able to accurately reconstruct the images viewed by the primates. "We now know which features are important for object recognition," says Bao. "The similarity between the important features observed in both biological visual systems and deep networks suggests the two systems might share a similar computational mechanism for object recognition. Indeed, this is the first time, to my knowledge, that a deep network has made a prediction about a feature of the brain that was not known before and turned out to be true. I think we are very close to figuring out how the primate brain solves the object recognition problem." The research paper is titled "A map of object space in primate inferotemporal cortex."
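The principal component step described above can be illustrated with a small sketch. It is not the study's code: the "activations" here are synthetic numbers standing in for the responses of one deep-network layer to a set of images, and the array sizes and function name are assumptions. The sketch centers the activations, extracts the top two principal components with a singular value decomposition, and assigns each image to a quadrant of the resulting two-dimensional space.

```python
import numpy as np

def top_principal_components(activations, n_components=2):
    """PCA via SVD on mean-centered data.

    activations: (n_images, n_units) layer responses.
    Returns image coordinates, principal axes, and explained-variance ratios.
    """
    centered = activations - activations.mean(axis=0)
    _, singular_values, vt = np.linalg.svd(centered, full_matrices=False)
    components = vt[:n_components]           # principal axes in unit space
    coords = centered @ components.T         # each image's position on those axes
    explained = singular_values**2 / np.sum(singular_values**2)
    return coords, components, explained[:n_components]

# Synthetic stand-in (assumption): 300 images, 50 units, with the
# variability driven mostly by two hidden factors plus a little noise.
rng = np.random.default_rng(0)
n_images, n_units = 300, 50
latent = rng.normal(size=(n_images, 2)) * np.array([5.0, 3.0])
mixing = rng.normal(size=(2, n_units))
activations = latent @ mixing + 0.1 * rng.normal(size=(n_images, n_units))

coords, components, explained = top_principal_components(activations)

# Quadrant index 0..3 from the signs of the first two coordinates.
quadrant = (coords[:, 0] > 0).astype(int) + 2 * (coords[:, 1] > 0).astype(int)
print(np.round(explained, 3), np.bincount(quadrant, minlength=4))
```

In the study, the analogous quadrants were interpreted in terms of object shape. In this toy version they are only sign patterns of the two leading components.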

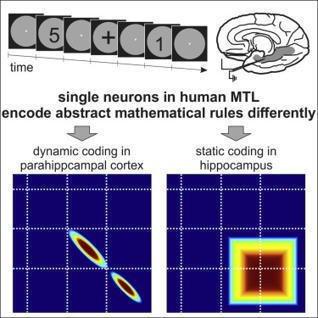

The brain has neurons that fire specifically during certain mathematical operations. This is shown by a recent study conducted by the Universities of Tübingen and Bonn. The findings indicate that some of the neurons detected are active exclusively during additions, while others are active during subtractions. They do not care whether the calculation instruction is written down as a word or a symbol. The results have now been published in the journal Current Biology. Most elementary school children probably already know that three apples plus two apples add up to five apples. However, what happens in the brain during such calculations is still largely unknown. The current study by the Universities of Bonn and Tübingen now sheds light on this issue. The researchers benefited from a special feature of the Department of Epileptology at the University Hospital Bonn. It specializes in surgical procedures on the brains of people with epilepsy. In some patients, seizures always originate from the same area of the brain. In order to precisely localize this defective area, the doctors implant several electrodes into the patients. The probes can be used to precisely determine the origin of the spasm. In addition, the activity of individual neurons can be measured via the wiring.

Some neurons fire only when summing up

Five women and four men participated in the current study. They had electrodes implanted in the so-called temporal lobe of the brain to record the activity of nerve cells. Meanwhile, the participants had to perform simple arithmetic tasks. "We found that different neurons fired during additions than during subtractions," explains Prof. Florian Mormann from the Department of Epileptology at the University Hospital Bonn. It was not the case that some neurons responded only to a "+" sign and others only to a "-" sign: "Even when we replaced the mathematical symbols with words, the effect remained the same," explains Esther Kutter, who is doing her doctorate in Prof. Mormann's research group. "For example, when subjects were asked to calculate '5 and 3', their addition neurons sprang back into action; whereas for '7 less 4,' their subtraction neurons did." This shows that the cells discovered actually encode a mathematical instruction for action. The brain activity thus showed with great accuracy what kind of tasks the test subjects were currently calculating: The researchers fed the cells' activity patterns into a self-learning computer program. At the same time, they told the software whether the subjects were currently calculating a sum or a difference. When the algorithm was confronted with new activity data after this training phase, it was able to accurately identify during which computational operation it had been recorded. Prof. Andreas Nieder from the University of Tübingen supervised the study together with Prof. Mormann. "We know from experiments with monkeys that neurons specific to certain computational rules also exist in their brains," he says. "In humans, however, there is hardly any data in this regard." During their analysis, the two working groups came across an interesting phenomenon: One of the brain regions studied was the so-called parahippocampal cortex. There, too, the researchers found nerve cells that fired specifically during addition or subtraction. However, when summing up, different addition neurons became alternately active during one and the same arithmetic task. Figuratively speaking, it is as if the plus key on the calculator were constantly changing its location. It was the same with subtraction. Researchers also refer to this as "dynamic coding."
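The train-then-test procedure described above (show a program labeled activity patterns, then ask it to label new ones) can be sketched in a few lines. The article does not say which algorithm the researchers used, so this example swaps in a simple nearest-centroid classifier as a stand-in. The simulated firing rates, the number of neurons, and all function names are assumptions made for illustration.

```python
import numpy as np

def train_centroids(patterns, labels):
    """Average activity pattern for each operation seen during training."""
    return {label: patterns[labels == label].mean(axis=0)
            for label in np.unique(labels)}

def predict(centroids, patterns):
    """Label each new pattern with the operation whose centroid is closest."""
    names = list(centroids)
    dists = np.stack(
        [np.linalg.norm(patterns - centroids[name], axis=1) for name in names],
        axis=1,
    )
    return np.array(names)[dists.argmin(axis=1)]

rng = np.random.default_rng(0)

def simulate_trials(n_trials, n_neurons=20):
    """Hypothetical spike counts: neurons 0-4 prefer addition, 5-9 subtraction."""
    labels = rng.choice(np.array(["add", "subtract"]), size=n_trials)
    rates = np.full((n_trials, n_neurons), 5.0)
    rates[labels == "add", :5] += 6.0
    rates[labels == "subtract", 5:10] += 6.0
    return rng.poisson(rates).astype(float), labels

# Training phase: patterns are shown together with the operation label.
train_patterns, train_labels = simulate_trials(200)
centroids = train_centroids(train_patterns, train_labels)

# Test phase: new patterns, labels withheld from the classifier.
test_patterns, test_labels = simulate_trials(100)
predicted = predict(centroids, test_patterns)
accuracy = (predicted == test_labels).mean()
print(f"held-out accuracy: {accuracy:.2f}")
```

Because the simulated addition and subtraction neurons differ strongly, held-out accuracy is high here. With real recordings, the point of scoring on unseen trials is the same: it shows that the activity patterns themselves carry information about the operation.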

Researchers funded by the federal BRAIN Initiative have mapped all the cell types — more than a hundred — in the motor cortex of the brain. The 17 studies, appearing online Oct. 6, 2021 in the journal Nature, are the result of five years of work by a huge consortium of researchers supported by the National Institutes of Health's Brain Research Through Advancing Innovative Neurotechnologies (BRAIN) Initiative to identify the myriad of different cell types in one portion of the brain. It is the first step in a long-term project to generate an atlas of the entire brain to help understand how the neural networks in our head control our body and mind and how they are disrupted in cases of mental and physical problems. "If you think of the brain as an extremely complex machine, how could we understand it without first breaking it down and knowing the parts?" asked cellular neuroscientist Helen Bateup, a University of California, Berkeley, associate professor of molecular and cell biology and co-author of the flagship paper that synthesizes the results of the other papers. "The first page of any manual of how the brain works should read: Here are all the cellular components, this is how many of them there are, here is where they are located and who they connect to." Individual researchers have previously identified dozens of cell types based on their shape, size, electrical properties and which genes are expressed in them. The new studies identify about five times more cell types, though many are subtypes of well-known cell types. For example, cells that release specific neurotransmitters, like gamma-aminobutyric acid (GABA) or glutamate, each have more than a dozen subtypes distinguishable from one another by their gene expression and electrical firing patterns. 
While the current papers address only the motor cortex, the BRAIN Initiative Cell Census Network (BICCN) -- created in 2017 -- endeavors to map all the different cell types throughout the brain, which consists of more than 160 billion individual cells, both neurons and support cells called glia. The BRAIN Initiative was launched in 2013 by then-President Barack Obama. "Once we have all those parts defined, we can then go up a level and start to understand how those parts work together, how they form a functional circuit, how that ultimately gives rise to perceptions and behavior and much more complex things," Bateup said. Together with former UC Berkeley professor John Ngai, Bateup and UC Berkeley colleague Dirk Hockemeyer have already used CRISPR-Cas9 to create mice in which a specific cell type is labeled with a fluorescent marker, allowing them to track the connections these cells make throughout the brain. For the flagship journal paper, the Berkeley team created two strains of "knock-in" reporter mice that provided novel tools for illuminating the connections of the newly identified cell types, she said. "One of our many limitations in developing effective therapies for human brain disorders is that we just don't know enough about which cells and connections are being affected by a particular disease and therefore can't pinpoint with precision what and where we need to target," said Ngai, who led UC Berkeley's Brain Initiative efforts before being tapped last year to direct the entire national initiative. "Detailed information about the types of cells that make up the brain and their properties will ultimately enable the development of new therapies for neurologic and neuropsychiatric diseases." Ngai is one of 13 corresponding authors of the flagship paper, which has more than 250 co-authors in all.


Creating brain-like computers with minimal energy requirements would revolutionize nearly every aspect of modern life. Funded by the Department of Energy, Quantum Materials for Energy Efficient Neuromorphic Computing (Q-MEEN-C) — a nationwide consortium led by the University of California San Diego — has been at the forefront of this research. UC San Diego Assistant Professor of Physics Alex Frañó is co-director of Q-MEEN-C and thinks of the center’s work in phases. In the first phase, he worked closely with President Emeritus of University of California and Professor of Physics Robert Dynes, as well as Rutgers Professor of Engineering Shriram Ramanathan. Together, their teams were successful in finding ways to create or mimic the properties of a single brain element (such as a neuron or synapse) in a quantum material. Now, in phase two, new research from Q-MEEN-C, published in Nano Letters, shows that electrical stimuli passed between neighboring electrodes can also affect non-neighboring electrodes. Known as non-locality, this discovery is a crucial milestone in the journey toward new types of devices that mimic brain functions, known as neuromorphic computing. Like many research projects now bearing fruit, the idea to test whether non-locality in quantum materials was possible came about during the pandemic. Physical lab spaces were shuttered, so the team ran calculations on arrays that contained multiple devices to mimic the multiple neurons and synapses in the brain. In running these tests, they found that non-locality was theoretically possible. “In the brain it’s understood that these non-local interactions are nominal — they happen frequently and with minimal exertion,” stated Frañó, one of the paper’s co-authors. “It’s a crucial part of how the brain operates, but similar behaviors replicated in synthetic materials are scarce.”
When labs reopened, they refined this idea further and enlisted UC San Diego Jacobs School of Engineering Associate Professor Duygu Kuzum, whose work in electrical and computer engineering helped them turn a simulation into an actual device. This involved taking a thin film of nickelate — a “quantum material” ceramic that displays rich electronic properties — inserting hydrogen ions, and then placing a metal conductor on top. A wire is attached to the metal so that an electrical signal can be sent to the nickelate. The signal causes the gel-like hydrogen atoms to move into a certain configuration and when the signal is removed, the new configuration remains.

An international team led by scientists at the University of Sydney has demonstrated nanowire networks can exhibit both short- and long-term memory like the human brain. The research has been published today in the journal Science Advances, led by Dr Alon Loeffler, who received his PhD in the School of Physics, with collaborators in Japan. "In this research we found higher-order cognitive function, which we normally associate with the human brain, can be emulated in non-biological hardware," Dr Loeffler said. "This work builds on our previous research in which we showed how nanotechnology could be used to build a brain-inspired electrical device with neural network-like circuitry and synapse-like signaling. "Our current work paves the way towards replicating brain-like learning and memory in non-biological hardware systems and suggests that the underlying nature of brain-like intelligence may be physical." Nanowire networks are a type of nanotechnology typically made from tiny, highly conductive silver wires that are invisible to the naked eye, covered in a plastic material, which are scattered across each other like a mesh. The wires mimic aspects of the networked physical structure of a human brain. Advances in nanowire networks could herald many real-world applications, such as improving robotics or sensor devices that need to make quick decisions in unpredictable environments. "This nanowire network is like a synthetic neural network because the nanowires act like neurons, and the places where they connect with each other are analogous to synapses," senior author Professor Zdenka Kuncic, from the School of Physics, said. "Instead of implementing some kind of machine learning task, in this study Dr Loeffler has actually taken it one step further and tried to demonstrate that nanowire networks exhibit some kind of cognitive function." 
To test the capabilities of the nanowire network, the researchers gave it a test similar to a common memory task used in human psychology experiments, called the N-Back task. For a person, the N-Back task might involve remembering a specific picture of a cat from a series of feline images presented in a sequence. An N-Back score of 7, the average for people, indicates the person can recognize the same image that appeared seven steps back. When applied to the nanowire network, the researchers found it could 'remember' a desired endpoint in an electric circuit seven steps back, meaning a score of 7 in an N-Back test. "What we did here is manipulate the voltages of the end electrodes to force the pathways to change, rather than letting the network just do its own thing. We forced the pathways to go where we wanted them to go," Dr Loeffler said. "When we implement that, its memory had much higher accuracy and didn't really decrease over time, suggesting that we've found a way to strengthen the pathways to push them towards where we want them, and then the network remembers it. "Neuroscientists think this is how the brain works, certain synaptic connections strengthen while others weaken, and that's thought to be how we preferentially remember some things, how we learn and so on." The researchers said that when the nanowire network is constantly reinforced, it reaches a point where that reinforcement is no longer needed. At that point the information is consolidated into memory. "It's kind of like the difference between long-term memory and short-term memory in our brains," Professor Kuncic explained. "If we want to remember something for a long period of time, we really need to keep training our brains to consolidate that, otherwise it just kind of fades away over time. 
One task showed that the nanowire network can store up to seven items in memory at substantially higher than chance levels without reinforcement training and near-perfect accuracy with reinforcement training."
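
For readers unfamiliar with the N-Back task mentioned above, the following minimal sketch shows how such a task is typically scored for a person viewing a sequence of images. The image names, the choice of n = 3, and the helper functions are hypothetical illustrations; they are not taken from the study and say nothing about how the nanowire experiment itself was implemented.

```python
def n_back_targets(sequence, n):
    """Indices where the item matches the one shown n steps earlier."""
    return [i for i in range(n, len(sequence)) if sequence[i] == sequence[i - n]]

def score_responses(sequence, n, responses):
    """Fraction of scored positions (index n onward) answered correctly.

    `responses` is the set of indices where the participant said "match".
    """
    targets = set(n_back_targets(sequence, n))
    scored = range(n, len(sequence))
    correct = sum((i in targets) == (i in responses) for i in scored)
    return correct / len(scored)

# Hypothetical sequence of cat pictures for a 3-back task.
images = ["cat_a", "cat_b", "cat_c", "cat_a", "cat_d", "cat_c", "cat_a"]
targets = n_back_targets(images, 3)

# A participant who spots the matches at positions 3 and 5 but misses position 6.
score = score_responses(images, 3, responses={3, 5})
print(targets, score)
```

A larger n makes the task harder, because more intervening items have to be held in memory before the comparison can be made.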

Many migratory species use the Earth’s magnetic field to keep their journeys on track. Now a study of a very non-migratory animal, the Drosophila fruit fly, shows the same capacity exists in some unexpected places. Perhaps humans are the rare ones because we don’t have this capability; if so, why? In the quest for survival, access to information about the world, particularly information your rivals lack, is exceptionally valuable. So it is not surprising animals have developed an astonishing array of ways to observe the world around them. Magnetic fields are one of these, but before humanity’s invention of powerful electromagnets these were generally very weak. The effort required to detect them was much greater than for light or sound. Consequently, biologists thought that only those animals that really needed to know their place on Earth – migratory pigeons or turtles for example – had exploited magnetoreception. However, a paper in Nature calls this into question. The possibility that Drosophila are capable of magnetoreception was raised in 2015 with the identification of a MagR protein produced by the flies that orientates itself to align with magnetic fields. The newly-published paper goes past this, revealing two methods by which the flies’ cells appear able to detect fields. The previous work identified photoreceptor proteins known as cryptochromes as being the sensors used by Drosophila to detect fields, with the capacity apparently failing in flies engineered not to produce cryptochromes leaving them magnetically blind. The authors of the new paper point to work showing cryptochromes do this by harnessing the powers of quantum super-positioning. However, the team also question the need for cryptochromes, showing their role may be substituted by a molecule that occurs in all living cells, humans included. 
Dr Alex Jones (no, not that one) of the National Physical Laboratory said in a statement, "The absorption of light by the cryptochrome results in movement of an electron within the protein which, due to quantum physics, can generate an active form of cryptochrome that occupies one of two states. The presence of a magnetic field impacts the relative populations of the two states, which in turn influences the 'active-lifetime' of this protein." The authors showed the molecule flavin adenine dinucleotide (FAD) binds to the cryptochromes to create their sensitivity to magnetism. However, they also found the cryptochromes may be an amplifier of FAD’s capacity, not essential to it. Even without cryptochromes, fly cells engineered to express extra FAD were able to respond to the presence of magnetic fields, as well as being highly sensitive to blue light in the presence of these fields. The magnetoreception required nothing more complex than an electron transfer to a side chain. The authors think cryptochromes may have evolved to take advantage of this. "This study may ultimately allow us to better appreciate the effects that magnetic field exposure might potentially have on humans," said co-lead author Professor Ezio Rosato of the University of Leicester.

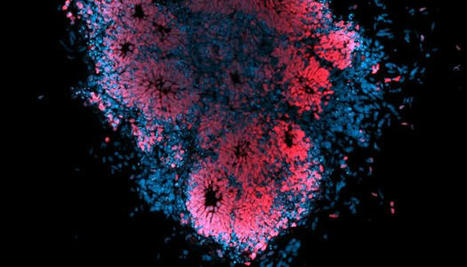

A University of Queensland-led project has used a ‘brain in a dish’ to study the effects of the Zika virus, taking research a step closer towards developing drugs to combat the infection. The mosquito-borne Zika virus is found in 89 countries and can penetrate the placenta of a pregnant mother to infect her baby, causing severe brain abnormalities. Dr Andrii Slonchak and Dr Alexander Khromykh from UQ’s School of Chemistry and Molecular Biosciences found a crucial element to Zika infection, viral noncoding RNA (sfRNA), helps it evade antiviral responses and cause cell death in developing brains. “It’s a little like something out of a science fiction movie – we’re growing an artificial and microscopic human brain in a petri dish and testing the effect of the virus on its cells,” Dr Slonchak said. “Stem cells self-organize into organ-like structures, or organoids, and in this case they have the structure and tissue architecture of the developing human brain. “Our study shows the role of viral noncoding RNA in trans-placental infection in pregnant mice and in cell death in human brain organoids. “This finding gives us a whole new look at how the virus works its way into the developing brain and knowledge we can use to develop more effective antiviral drugs.” Dr Khromykh said while the number of recorded cases of Zika may seem low, at around 1.3 million in total globally, its impact warrants urgent attention. “Zika can cause significant abnormalities in babies, so there’s a race to understand how it works and how to stop it,” Dr Khromykh said. “Abnormalities can include optic nerve damage, smaller than expected head sizes, problems in the area of the brain that connects the two hemispheres and wasting of tissue in critical brain regions. 
“No cases of Zika-related birth defects have been reported in Australia, but given the unpredictable nature of viral outbreaks and the endemic nature of Zika in the Oceanic region, it very well might strike and we should be prepared for it.” The next step for researchers is to further understand how a specific viral protein, NS5, interacts with sfRNA at the molecular level and how this interaction helps the virus to escape antiviral response. “This information will help researchers develop antiviral drugs to block this interaction and combat Zika,” Dr Khromykh said.

Goldfish are capable of navigating on land, Israeli researchers have found, after training fish to drive. The team at Ben-Gurion University developed a FOV — a fish-operated vehicle. The robotic car is fitted with lidar, a remote sensing technology that uses pulsed laser light to collect data on the vehicle's ground location and the fish's whereabouts inside a mounted water tank. A computer, camera, electric motors and omni-wheels give the fish control of the vehicle. "Surprisingly, it doesn't take the fish a long time to learn how to drive the vehicle. They're confused at first," said researcher Shachar Givon. "They don't know what's going on but they're very quick to realize that there is a correlation between their movement and the movement of the machine that they're in," he added. Six goldfish, each receiving around 10 driving lessons, took part in the study. Each time one of them reached a target set by the researchers, it was rewarded with food. And some goldfish are better drivers than others, according to the research. "There were very good fish that were doing excellent and there were mediocre fish that showed control of the vehicle but were less proficient in driving it," said biology professor and neuroscientist Ronen Segev. Showing that a fish has the cognitive capability to navigate outside its natural environment of water can expand scientific knowledge of animals' essential navigation skills. "We humans think of ourselves as very special and many think of fish as primitive but this is not correct," said Segev. "There are other very important and very smart creatures."

Human and mouse neurons in a dish learned to play the video game Pong, researchers report October 12, 2022 in the journal Neuron. The experiments are evidence that even brain cells in a dish can exhibit inherent intelligence, modifying their behavior over time.

"From worms to flies to humans, neurons are the starting block for generalized intelligence," says first author Brett Kagan (@ANeuroExplorer), chief scientific officer at Cortical Labs in Melbourne, Australia. "So, the question was, can we interact with neurons in a way to harness that inherent intelligence?"

To start, the researchers connected the neurons to a computer in such a way that the neurons received feedback on whether their in-game paddle was hitting the ball. They monitored the neurons' activity and responses to this feedback using electric probes that recorded "spikes" on a grid.

The spikes got stronger the more a neuron moved its paddle and hit the ball. When neurons missed, their playstyle was critiqued by a software program created by Cortical Labs. This demonstrated that the neurons could adapt activity to a changing environment, in a goal-oriented way, in real time.

"We chose Pong due to its simplicity and familiarity, but, also, it was one of the first games used in machine learning, so we wanted to recognize that," says Kagan, who worked with collaborators from 10 other institutions on the project.

"An unpredictable stimulus was applied to the cells, and the system as a whole would reorganize its activity to better play the game and to minimize having a random response," he says. "You can also think that just playing the game, hitting the ball and getting predictable stimulation, is inherently creating more predictable environments."

The theory behind this learning is rooted in the free-energy principle. Simply put, the brain adapts to its environment by changing either its world view or its actions to better fit the world around it.

Pong wasn't the only game the research team tested. "You know when the Google Chrome browser crashes and you get that dinosaur that you can make jump over obstacles (Project Bolan). We've done that and we've seen some nice preliminary results, but we still have more work to do building new environments for custom purposes," says Kagan.

Future directions of this work have potential in disease modeling, drug discoveries, and expanding the current understanding of how the brain works and how intelligence arises.

Birds have impressive cognitive abilities and show a high level of intelligence. Compared to mammals of about the same size, the brains of birds also contain many more neurons. Now a new study reported in Current Biology on September 8, 2022 helps to explain how birds can afford to maintain more brain cells: their neurons get by on less fuel in the form of glucose. “What surprised us the most is not, per se, that the neurons consume less glucose—this could have been expected by differences in the size of their neurons,” says Kaya von Eugen of Ruhr University Bochum, Germany. “But the magnitude of difference is so large that the size difference cannot be the only contributing factor. This implies there must be something additionally different in the bird brain that allows them to keep the costs so low.” A landmark study in 2016 showed that the bird brain holds many more neurons compared to a similarly sized mammalian brain, the researchers explained. Since brains generally are made up of energetically costly tissue, it raised a critical question: how are birds able to support so many neurons? To answer this question, von Eugen and colleagues set out to determine the neuronal energy budget of birds based on studies in pigeons. They used imaging methods that allowed them to estimate glucose metabolism in the birds. They also used modelling approaches to calculate the brain’s metabolic rate and glucose consumption. Their studies found that the pigeon brain consumes a surprisingly low amount of glucose (27.29 ± 1.57 μmol glucose per 100 g per min) when the animal is awake. That translates into a surprisingly low energy budget for the brain, especially when one compares it to mammals. It means that neurons in the bird brain consume about one third as much glucose as those in the mammalian brain, on average. In other words, their neurons are, for reasons that aren’t yet clear, less costly. 
Von Eugen says it’s possible the differences are related to birds’ higher body temperature or the specific layout of their brains. The bird brain is also smaller on average than the mammalian brain. But their brains retain impressive capabilities, perhaps in part due to their less costly but more numerous neurons.

Over several decades, neuroscientists have created a well-defined map of the brain’s “language network,” or the regions of the brain that are specialized for processing language. Found primarily in the left hemisphere, this network includes regions within Broca’s area, as well as in other parts of the frontal and temporal lobes. However, the vast majority of those mapping studies have been done in English speakers as they listened to or read English texts. MIT neuroscientists have now performed brain imaging studies of speakers of 45 different languages. The results show that the speakers’ language networks appear to be essentially the same as those of native English speakers. The findings, while generally not surprising, establish that the location and key properties of the language network appear to be universal. The work also lays the groundwork for future studies of linguistic elements that would be difficult or impossible to study in English speakers because English doesn’t have those features. “This study is very foundational, extending some findings from English to a broad range of languages,” says Evelina Fedorenko, the Frederick A. and Carole J. Middleton Career Development Associate Professor of Neuroscience at MIT and a member of MIT’s McGovern Institute for Brain Research. “The hope is that now that we see that the basic properties seem to be general across languages, we can ask about potential differences between languages and language families in how they are implemented in the brain, and we can study phenomena that don’t really exist in English.” Fedorenko is the senior author of the study, which appears today in Nature Neuroscience. Saima Malik-Moraleda, a PhD student in the Speech and Hearing Bioscience and Technology program at Harvard University, and Dima Ayyash, a former research assistant, are the lead authors of the paper. 
The precise locations and shapes of language areas differ across individuals, so to find the language network, researchers ask each person to perform a language task while scanning their brains with functional magnetic resonance imaging (fMRI). Listening to or reading sentences in one’s native language should activate the language network. To distinguish this network from other brain regions, researchers also ask participants to perform tasks that should not activate it, such as listening to an unfamiliar language or solving math problems. Several years ago, Fedorenko began designing these “localizer” tasks for speakers of languages other than English. While most studies of the language network have used English speakers as subjects, English does not include many features commonly seen in other languages. For example, in English, word order tends to be fixed, while in other languages there is more flexibility in how words are ordered. Many of those languages instead use the addition of morphemes, or segments of words, to convey additional meaning and relationships between words. “There has been growing awareness for many years of the need to look at more languages, if you want to make claims about how language works, as opposed to how English works,” Fedorenko says. “We thought it would be useful to develop tools to allow people to rigorously study language processing in the brain in other parts of the world. There’s now access to brain imaging technologies in many countries, but the basic paradigms that you would need to find the language-responsive areas in a person are just not there.” For the new study, the researchers performed brain imaging of two speakers of 45 different languages, representing 12 different language families. Their goal was to see if key properties of the language network, such as location, left lateralization, and selectivity, were the same in those participants as in people whose native language is English. 
The researchers decided to use “Alice in Wonderland” as the text that everyone would listen to, because it is one of the most widely translated works of fiction in the world. They selected 24 short passages and three long passages, each of which was recorded by a native speaker of the language. Each participant also heard nonsensical passages, which should not activate the language network, and was asked to do a variety of other cognitive tasks that should not activate it. The team found that the language networks of participants in this study were found in approximately the same brain regions, and had the same selectivity, as those of native speakers of English. “Language areas are selective,” Malik-Moraleda says. “They shouldn’t be responding during other tasks such as a spatial working memory task, and that was what we found across the speakers of 45 languages that we tested.” Additionally, language regions that are typically activated together in English speakers, such as the frontal language areas and temporal language areas, were similarly synchronized in speakers of other languages. The researchers also showed that among all of the subjects, the small amount of variation they saw between individuals who speak different languages was the same as the amount of variation that would typically be seen between native English speakers.

The octopus is an exceptional organism with an extremely complex brain and cognitive abilities that are unique among invertebrates. So much so that in some ways it has more in common with vertebrates than with invertebrates. The neural and cognitive complexity of these animals could originate from a molecular analogy with the human brain, according to a research paper recently published in BMC Biology and coordinated by Remo Sanges from SISSA of Trieste and by Graziano Fiorito from Stazione Zoologica Anton Dohrn of Naples. The research shows that the same 'jumping genes' are active both in the human brain and in the brain of two species, Octopus vulgaris, the common octopus, and Octopus bimaculoides, the Californian octopus. The discovery could help us understand the secret of the intelligence of these fascinating organisms. Sequencing the human genome revealed as early as 2001 that over 45% of it is composed of sequences called transposons, so-called 'jumping genes' that, through molecular copy-and-paste or cut-and-paste mechanisms, can 'move' from one point to another of an individual's genome, shuffling or duplicating. In most cases, these mobile elements remain silent: they have no visible effects and have lost their ability to move. Some are inactive because they have, over generations, accumulated mutations; others are intact, but blocked by cellular defense mechanisms. From an evolutionary point of view even these fragments and broken copies of transposons can still be useful, as 'raw matter' that evolution can sculpt. Among these mobile elements, the most relevant are those belonging to the so-called LINE (Long Interspersed Nuclear Elements) family, found in a hundred copies in the human genome and still potentially active. 
It has been traditionally thought that LINEs' activity was just a vestige of the past, a remnant of the evolutionary processes that involved these mobile elements, but in recent years new evidence emerged showing that their activity is finely regulated in the brain. There are many scientists who believe that LINE transposons are associated with cognitive abilities such as learning and memory: they are particularly active in the hippocampus, the most important structure of our brain for the neural control of learning processes. The octopus' genome, like ours, is rich in 'jumping genes', most of which are inactive. Focusing on the transposons still capable of copy-and-paste, the researchers identified an element of the LINE family in parts of the brain crucial for the cognitive abilities of these animals. The discovery, the result of the collaboration between Scuola Internazionale Superiore di Studi Avanzati, Stazione Zoologica Anton Dohrn and Istituto Italiano di Tecnologia, was made possible thanks to next generation sequencing techniques, which were used to analyze the molecular composition of the genes active in the nervous system of the octopus. "The discovery of an element of the LINE family, active in the brain of the two octopus species, is very significant because it adds support to the idea that these elements have a specific function that goes beyond copy-and-paste," explains Remo Sanges, director of the Computational Genomics laboratory at SISSA, who started working on this project when he was a researcher at Stazione Zoologica Anton Dohrn of Naples. The study, published in BMC Biology, was carried out by an international team with more than twenty researchers from all over the world.

A completely paralyzed patient can now convey full sentences thanks to electrodes implanted in his brain. Medical researchers surgically placed brain-computer interfaces (BCIs) into a patient’s brain. The implants tracked electrical activity in the brain and translated the signals to commands to another device. According to a paper published in Nature detailing the procedure, the subject was able to message requests for beer, soup and massages from his nurses. He also asked to watch movies with his son. The participant suffers from amyotrophic lateral sclerosis, or ALS, and is completely ‘locked-in,’ meaning he can’t use his limbs or make eye movements. In the past, BCIs have helped patients convey ‘yes’ or ‘no’ via thoughts or allowed partially paralyzed patients to move prosthetic limbs. “It’s really remarkable to be able to reestablish communication with someone in a completely locked-in state. To me, that’s a tremendous breakthrough and obviously quite meaningful for the research participant,” said Jaimie Henderson, M.D., a Stanford University neurosurgeon who wasn’t involved in the project. The individual received the innovative treatment through ALS Voice gGmbH, a nonprofit that works with noncommunicative participants to incorporate BCIs and other technologies to improve their quality of life. The patient responded well to neurofeedback, which shows brain activity in real time so a patient can control their responses. When the brain’s electrical activity changes, a computer responds with ascending or descending tones. “Within two days, [the patient] was able to increase and decrease the frequency of a sound tone,” said Ujwal Chaudhary of ALS Voice gGmbH. His team developed software based on a computer system the patient’s family created. One of the patient’s first sentences was, “boys, it works so effortlessly.” In the future, Chaudhary wants to develop software with a word list that has an autocomplete function. 
“There are many ways in which we could make it faster,” he said.

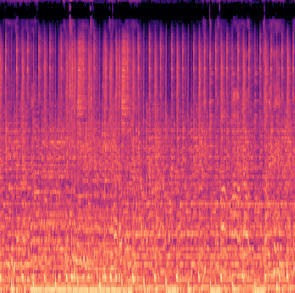

For the first time, MIT neuroscientists have identified a population of neurons in the human brain that lights up when we hear singing, but not other types of music. These neurons, found in the auditory cortex, appear to respond to the specific combination of voice and music, but not to either regular speech or instrumental music. Exactly what they are doing is unknown and will require more work to uncover, the researchers say. "The work provides evidence for relatively fine-grained segregation of function within the auditory cortex, in a way that aligns with an intuitive distinction within music," says Sam Norman-Haignere, a former MIT postdoc who is now an assistant professor of neuroscience at the University of Rochester Medical Center. The work builds on a 2015 study in which the same research team used functional magnetic resonance imaging (fMRI) to identify a population of neurons in the brain's auditory cortex that responds specifically to music. In the new work, the researchers used recordings of electrical activity taken at the surface of the brain, which gave them much more precise information than fMRI. "There's one population of neurons that responds to singing, and then very nearby is another population of neurons that responds broadly to lots of music. At the scale of fMRI, they're so close that you can't disentangle them, but with intracranial recordings, we get additional resolution, and that's what we believe allowed us to pick them apart," says Norman-Haignere. Norman-Haignere is the lead author of the study, which appears today in the journal Current Biology. Josh McDermott, an associate professor of brain and cognitive sciences, and Nancy Kanwisher, the Walter A. Rosenblith Professor of Cognitive Neuroscience, both members of MIT's McGovern Institute for Brain Research and Center for Brains, Minds and Machines (CBMM), are the senior authors of the study.

The ability to recognize familiar faces is fundamental to social interaction. This process provides visual information and activates social and personal knowledge about a person who is familiar. But how the brain processes this information across participants has long been a question. Distinct information about familiar faces is encoded in a neural code that is shared across brains, according to a new Dartmouth study published in the Proceedings of the National Academy of Sciences. “Within visual processing areas, we found that information about personally familiar and visually familiar faces is shared across the brains of people who have the same friends and acquaintances,” says first author Matteo Visconti di Oleggio Castello, Guarini ’18, who conducted this research as a graduate student in psychological and brain sciences at Dartmouth and is now a neuroscience post-doctoral scholar at the University of California, Berkeley. “The surprising part of our findings was that the shared information about personally familiar faces also extends to areas that are non-visual and important for social processing, suggesting that there is shared social information across brains.” For the study, the research team applied a method called hyperalignment, which creates a common representational space for understanding how brain activity is similar between participants. The team used data obtained from three fMRI tasks with 14 graduate students who had known each other for at least two years. In two of the tasks, participants were presented with images of four other personally familiar graduate students and four other visually familiar persons, who were previously unknown. In the third task, participants watched parts of The Grand Budapest Hotel. The movie data, which is publicly available, was used to apply hyperalignment and align participants’ brain responses into a common representational space. This allowed the researchers to use machine learning classifiers to predict what stimuli a participant was looking at based on the brain activity of the other participants. The results showed that the identity of both visually familiar and personally familiar faces was decoded accurately in brain areas that are mostly involved in the visual processing of faces. Outside of the visual areas, however, there was little decoding. For visually familiar identities, participants only knew what the stimuli looked like; they did not know who these people were or have any other information about them.
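For readers curious what "predicting from the brain activity of the other participants" can look like in practice, here is a minimal Python sketch of between-subject decoding. It is not the Dartmouth team's pipeline: it assumes responses have already been aligned into a common space (the hyperalignment step itself is not shown), swaps in a simple nearest-centroid classifier, and runs on synthetic data. The function name `leave_one_subject_out_accuracy`, the array shapes, and the noise level are all illustrative assumptions.

```python
import numpy as np


def leave_one_subject_out_accuracy(data, labels):
    """Between-subject decoding with a nearest-centroid classifier.

    data:   array of shape (n_subjects, n_trials, n_features), assumed to be
            already aligned into a common representational space.
    labels: array of shape (n_trials,), the face identity shown on each trial
            (assumed identical across subjects).
    """
    classes = np.unique(labels)
    accuracies = []
    for test_subject in range(data.shape[0]):
        # Train only on the other participants' responses.
        train = np.delete(data, test_subject, axis=0)
        # One average response pattern per identity, pooled over training subjects.
        centroids = np.stack(
            [train[:, labels == c].mean(axis=(0, 1)) for c in classes]
        )
        # Distance from each held-out trial to each identity centroid.
        held_out = data[test_subject]
        dists = np.linalg.norm(held_out[:, None, :] - centroids[None, :, :], axis=2)
        predicted = classes[dists.argmin(axis=1)]
        accuracies.append((predicted == labels).mean())
    return float(np.mean(accuracies))


# Synthetic stand-in data: 14 subjects, 8 identities, 5 trials each, 50 features.
rng = np.random.default_rng(0)
identity_patterns = rng.normal(size=(8, 50))   # shared "neural code" per identity
labels = np.repeat(np.arange(8), 5)
data = identity_patterns[labels][None] + 0.5 * rng.normal(size=(14, 40, 50))

accuracy = leave_one_subject_out_accuracy(data, labels)
print(f"mean between-subject accuracy: {accuracy:.2f} (chance = {1 / 8:.3f})")
```

Because the synthetic identity patterns are shared across subjects by construction, decoding here is easy. In real data, above-chance accuracy in a brain area would be the evidence that the code for a face is shared across brains.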
