
Creating brain-like computers with minimal energy requirements would revolutionize nearly every aspect of modern life. Funded by the Department of Energy, Quantum Materials for Energy Efficient Neuromorphic Computing (Q-MEEN-C), a nationwide consortium led by the University of California San Diego, has been at the forefront of this research. UC San Diego Assistant Professor of Physics Alex Frañó is co-director of Q-MEEN-C and thinks of the center’s work in phases. In the first phase, he worked closely with President Emeritus of the University of California and Professor of Physics Robert Dynes, as well as Rutgers Professor of Engineering Shriram Ramanathan. Together, their teams succeeded in finding ways to create or mimic the properties of a single brain element (such as a neuron or synapse) in a quantum material. Now, in phase two, new research from Q-MEEN-C, published in Nano Letters, shows that electrical stimuli passed between neighboring electrodes can also affect non-neighboring electrodes. Known as non-locality, this discovery is a crucial milestone in the journey toward neuromorphic computing: new types of devices that mimic brain functions. Like many research projects now bearing fruit, the idea to test whether non-locality in quantum materials was possible came about during the pandemic. Physical lab spaces were shuttered, so the team ran calculations on arrays that contained multiple devices to mimic the multiple neurons and synapses in the brain. In running these tests, they found that non-locality was theoretically possible. “In the brain it’s understood that these non-local interactions are nominal: they happen frequently and with minimal exertion,” stated Frañó, one of the paper’s co-authors. “It’s a crucial part of how the brain operates, but similar behaviors replicated in synthetic materials are scarce.”
When labs reopened, they refined this idea further and enlisted UC San Diego Jacobs School of Engineering Associate Professor Duygu Kuzum, whose work in electrical and computer engineering helped them turn a simulation into an actual device. This involved taking a thin film of nickelate — a “quantum material” ceramic that displays rich electronic properties — inserting hydrogen ions, and then placing a metal conductor on top. A wire is attached to the metal so that an electrical signal can be sent to the nickelate. The signal causes the gel-like hydrogen atoms to move into a certain configuration and when the signal is removed, the new configuration remains.

Many migratory species use the Earth’s magnetic field to keep their journeys on track. Now a study of a very non-migratory animal, the Drosophila fruit fly, shows the same capacity exists in some unexpected places. Perhaps humans are the rare ones because we don’t have this capability; if so, why? In the quest for survival, access to information about the world, particularly information your rivals lack, is exceptionally valuable. So it is not surprising animals have developed an astonishing array of ways to observe the world around them. Sensing magnetic fields is one of these, but before humanity’s invention of powerful electromagnets such fields were generally very weak. The effort required to detect them was much greater than for light or sound. Consequently, biologists thought that only those animals that really needed to know their place on Earth – migratory pigeons or turtles for example – had exploited magnetoreception. However, a paper in Nature calls this into question. The possibility that Drosophila are capable of magnetoreception was raised in 2015 with the identification of a MagR protein produced by the flies that orientates itself to align with magnetic fields. The newly published paper goes further, revealing two methods by which the flies’ cells appear able to detect fields. The previous work identified photoreceptor proteins known as cryptochromes as the sensors Drosophila use to detect fields: flies engineered not to produce cryptochromes apparently lose the capacity and are left magnetically blind. The authors of the new paper point to work showing cryptochromes do this by harnessing quantum superposition. However, the team also question the need for cryptochromes, showing their role may be substituted by a molecule that occurs in all living cells, humans included.
Dr Alex Jones (no, not that one) of the National Physics Laboratory said in a statement, "The absorption of light by the cryptochrome results in movement of an electron within the protein which, due to quantum physics, can generate an active form of cryptochrome that occupies one of two states. The presence of a magnetic field impacts the relative populations of the two states, which in turn influences the 'active-lifetime' of this protein." The authors showed the molecule flavin adenine dinucleotide (FAD) binds to the cryptochromes to create their sensitivity to magnetism. However, they also found the cryptochromes may be an amplifier of FAD’s capacity, not essential to it. Even without cryptochromes, fly cells engineered to express extra FAD were able to respond to the presence of magnetic fields, as well as being highly sensitive to blue light in the presence of these fields. The magnetoreception required nothing more complex than an electron transfer to a side chain. The authors think cryptochromes may have evolved to take advantage of this. "This study may ultimately allow us to better appreciate the effects that magnetic field exposure might potentially have on humans,” said co-lead author Professor Ezio Rosato of the University of Leicester.

Birds have impressive cognitive abilities and show a high level of intelligence. Compared to mammals of about the same size, the brains of birds also contain many more neurons. Now a new study reported in Current Biology on September 8 2022 helps to explain how birds can afford to maintain more brain cells: their neurons get by on less fuel in the form of glucose. “What surprised us the most is not, per se, that the neurons consume less glucose—this could have been expected by differences in the size of their neurons,” says Kaya von Eugen of Ruhr University Bochum, Germany. “But the magnitude of difference is so large that the size difference cannot be the only contributing factor. This implies there must be something additionally different in the bird brain that allows them to keep the costs so low.” A landmark study in 2016 showed that the bird brain holds many more neurons compared to a similarly sized mammalian brain, the researchers explained. Since brains generally are made up of energetically costly tissue, it raised a critical question: how are birds able to support so many neurons? To answer this question, von Eugen and colleagues set out to determine the neuronal energy budget of birds based on studies in pigeons. They used imaging methods that allowed them to estimate glucose metabolism in the birds. They also used modelling approaches to calculate the brain’s metabolic rate and glucose consumption. Their studies found that the pigeon brain consumes a surprisingly low amount of glucose (27.29 ± 1.57 μmol glucose per 100 g per min) when the animal is awake. That translates into a surprisingly low energy budget for the brain, especially when one compares it to mammals. It means that neurons in the bird brain consume three times less glucose than those in the mammalian brain, on average. In other words, their neurons are, for reasons that aren’t yet clear, less costly. 
Von Eugen says it’s possible the differences are related to birds’ higher body temperature or the specific layout of their brains. The bird brain is also smaller on average than the mammalian brain. But their brains retain impressive capabilities, perhaps in part due to their less costly but more numerous neurons.

For the first time, MIT neuroscientists have identified a population of neurons in the human brain that lights up when we hear singing, but not other types of music. These neurons, found in the auditory cortex, appear to respond to the specific combination of voice and music, but not to either regular speech or instrumental music. Exactly what they are doing is unknown and will require more work to uncover, the researchers say. "The work provides evidence for relatively fine-grained segregation of function within the auditory cortex, in a way that aligns with an intuitive distinction within music," says Sam Norman-Haignere, a former MIT postdoc who is now an assistant professor of neuroscience at the University of Rochester Medical Center. The work builds on a 2015 study in which the same research team used functional magnetic resonance imaging (fMRI) to identify a population of neurons in the brain's auditory cortex that responds specifically to music. In the new work, the researchers used recordings of electrical activity taken at the surface of the brain, which gave them much more precise information than fMRI. "There's one population of neurons that responds to singing, and then very nearby is another population of neurons that responds broadly to lots of music. At the scale of fMRI, they're so close that you can't disentangle them, but with intracranial recordings, we get additional resolution, and that's what we believe allowed us to pick them apart," says Norman-Haignere. Norman-Haignere is the lead author of the study, which appears today in the journal Current Biology. Josh McDermott, an associate professor of brain and cognitive sciences, and Nancy Kanwisher, the Walter A. Rosenblith Professor of Cognitive Neuroscience, both members of MIT's McGovern Institute for Brain Research and Center for Brains, Minds and Machines (CBMM), are the senior authors of the study.

Elon Musk today announced a breakthrough in his endeavor to sync the human brain with artificial intelligence. During a live-streamed demonstration involving farm animals and a stage, Musk said that his company Neuralink had built a self-contained neural implant that can wirelessly transmit detailed brain activity without the aid of external hardware. Musk demonstrated the device with live pigs, one of which had the implant in its brain. A screen above the pig streamed the electrical brain activity being registered by the device. “It’s like a Fitbit in your skull with tiny wires,” Musk said in his presentation. “You need an electrical thing to solve an electrical problem.” Musk’s goal is to build a neural implant that can sync up the human brain with AI, enabling humans to control computers, prosthetic limbs, and other machines using only thoughts. When asked during the live Q&A whether the device would ever be used for gaming, Musk answered an emphatic “yes.” Musk’s aspirations for this brain-computer interface (BCI) system are to be able to read and write from millions of neurons in the brain, translating human thought into computer commands, and vice versa. And it would all happen on a small, wireless, battery-powered implant unseen from the outside of the body. His company has been working on the technology for about four years. Teams of researchers globally have been experimenting with surgically implanted BCI systems in humans for over 15 years. The BrainGate consortium and other groups have used BCI to enable people with neurologic diseases and paralysis to operate tablets, type eight words per minute and control prosthetic limbs using only their thoughts.

Elon Musk demonstrated a working Neuralink brain-machine interface device implanted in a pig during a live broadcast. He said the purpose of the presentation was to recruit employees who would like to help develop the system. “We’re not trying to raise money or do anything else, but the main purpose is to convince great people to come work at Neuralink, and help us bring the product to fruition; make it affordable and reliable and such that anyone who wants one can have one,” he said. Neuralink aims to solve brain-related issues with the brain chip called ‘Link’. Musk said the device could help with memory loss, strokes, addiction, depression, and anxiety, and could even monitor a user’s health to warn if they are about to have a heart attack. The interface could also help return mobility to paralyzed individuals through artificial limbs. The user would be able to move prosthetics with their thoughts via the Link brain-machine interface. Ultimately, Musk's vision for Neuralink is for humans to merge with artificial intelligence (AI), “such that the future of the world is controlled by the combined will of the people of Earth … I think that that’s obviously gonna be the future that we want,” he stated. The Neuralink device is currently in its initial phase of development. The design has changed since it was unveiled in 2019. Previously, the device sat visibly behind the user’s ear; now it looks like a small coin that will be placed above the skull. It’s “like a Fitbit in your skull with tiny wires,” Musk said a couple of times during the presentation, adding that Neuralink aims to connect humans to the device using Bluetooth to pair with an app on a cell phone. The Link chip features 1,024 tiny electrode threads that a surgical robot inserts into the brain to stimulate neurons.
“For the initial device, it’s read/write in every channel with about 1024 channels, all-day battery life that recharges overnight and has quite a long-range, so you can have the range being to your phone,” Musk said. “I should say that’s kind of an important thing, because this would connect to your phone, and so the application would be on your phone, and the Link communicating, by essentially Bluetooth low energy to the device in your head.” The Link device will be capable of inductive charging (wirelessly).

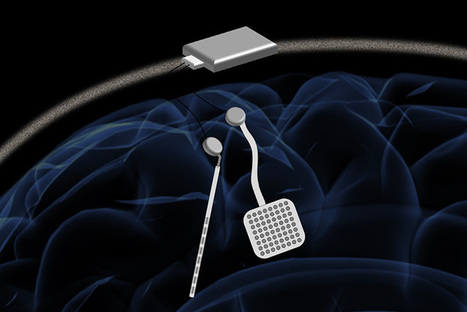

A new neurostimulator developed by engineers at the University of California, Berkeley, can listen to and stimulate electric current in the brain at the same time, potentially delivering fine-tuned treatments to patients with diseases like epilepsy and Parkinson's. The new device, named the WAND, works like a "pacemaker for the brain," monitoring the brain's electrical activity and delivering electrical stimulation if it detects something amiss. These devices can be extremely effective at preventing debilitating tremors or seizures in patients with a variety of neurological conditions. But the electrical signatures that precede a seizure or tremor can be extremely subtle, and the frequency and strength of electrical stimulation required to prevent them are equally touchy. It can take years of small adjustments by doctors before the devices provide optimal treatment. WAND, which stands for wireless artifact-free neuromodulation device, is both wireless and autonomous, meaning that once it learns to recognize the signs of tremor or seizure, it can adjust the stimulation parameters on its own to prevent the unwanted movements. And because it is closed-loop (meaning it can stimulate and record simultaneously), it can adjust these parameters in real time. "The process of finding the right therapy for a patient is extremely costly and can take years. Significant reduction in both cost and duration can potentially lead to greatly improved outcomes and accessibility," said Rikky Muller, assistant professor of electrical engineering and computer sciences at Berkeley. "We want to enable the device to figure out what is the best way to stimulate for a given patient to give the best outcomes. And you can only do that by listening and recording the neural signatures."
WAND can record electrical activity over 128 channels, or from 128 points in the brain, compared to eight channels in other closed-loop systems. To demonstrate the device, the team used WAND to recognize and delay specific arm movements in rhesus macaques. The device is described in a study that appeared today (Dec. 31, 2018) in Nature Biomedical Engineering.

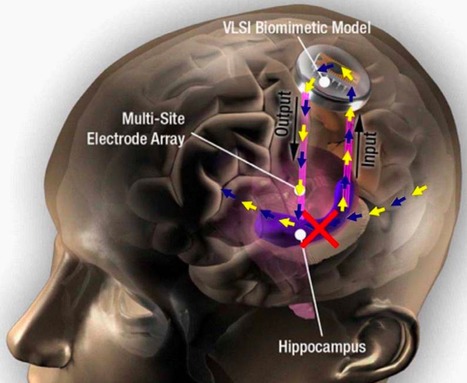

Scientists at Wake Forest Baptist Medical Center and the University of Southern California (USC) Viterbi School of Engineering have demonstrated a neural prosthetic system that can improve memory by “writing” information “codes” (based on a patient’s specific memory patterns) into the hippocampus, a part of the brain involved in making new memories, via an implanted electrode. In this pilot study, described in a paper published in Journal of Neural Engineering, epilepsy patients’ short-term memory performance showed a 35 to 37 percent improvement over baseline measurements. The research, funded by the U.S. Defense Advanced Research Projects Agency (DARPA), offers evidence supporting pioneering research by USC scientist Theodore Berger, Ph.D. (a co-author of the paper), on an electronic system for restoring memory in rats (reported on KurzweilAI in 2011). “This is the first time scientists have been able to identify a patient’s own brain-cell code or pattern for memory and, in essence, ‘write in’ that code to make existing memory work better, an important first step in potentially restoring memory loss,” said the paper’s lead author Robert Hampson, Ph.D., professor of physiology/pharmacology and neurology at Wake Forest Baptist. The study focused on improving episodic memory (information that is new and useful for a short period of time, such as where you parked your car on any given day), the most common type of memory loss in people with Alzheimer’s disease, stroke, and head injury. The researchers enrolled epilepsy patients at Wake Forest Baptist who were participating in a diagnostic brain-mapping procedure that used surgically implanted electrodes placed in various parts of the brain to pinpoint the origin of the patients’ seizures. The paper is titled “Developing a hippocampal neural prosthetic to facilitate human memory encoding and recall.”

Japanese scientists have created a creepy machine that can peer into your mind's eye with incredible accuracy. The AI studies electrical signals in the brain to work out exactly what images someone is looking at, and even thinking about. The technology opens the door to strange future scenarios, such as those portrayed in the series 'Black Mirror', where anyone can record and play back their memories. The breakthrough relies on neural networks, which try to simulate the way the brain works in order to learn. These networks can be trained to recognize patterns in information, including speech, text data, or visual images, and are the basis for a large number of the developments in AI over recent years. They use input from the digital world to learn, with practical applications like Google's language translation services, Facebook's facial recognition software and Snapchat's image-altering live filters. The Kyoto team's deep neural network was trained using 50 natural images and the corresponding fMRI results from volunteers who were looking at them. The trained network then recreated the images viewed by the volunteers. The team then used a second type of AI called a deep generative network to check that the reconstructions looked like real images, refining them to make them more recognizable.

Whales and dolphins (cetaceans) live in tightly knit social groups, have complex relationships, talk to each other and even have regional dialects, much like human societies. A major new study, published today in Nature Ecology & Evolution, has linked the complexity of cetacean culture and behaviour to the size of their brains. The research was a collaboration between scientists at The University of Manchester, The University of British Columbia, Canada, The London School of Economics and Political Science (LSE) and Stanford University, United States. The study is the first of its kind to create a large dataset of cetacean brain size and social behaviors. The team compiled information on 90 different species of dolphins, whales, and porpoises. It found overwhelming evidence that cetaceans have sophisticated social and cooperative behavior traits, similar to many found in human culture. The study demonstrates that these societal and cultural characteristics are linked with brain size and brain expansion, also known as encephalisation. The long list of behavioral similarities includes many traits shared with humans and other primates, such as:

- complex alliance relationships: working together for mutual benefit
- social transfer of hunting techniques: teaching how to hunt and using tools
- cooperative hunting
- complex vocalizations, including regional group dialects: 'talking' to each other
- vocal mimicry and 'signature whistles' unique to individuals: using 'name' recognition
- interspecific cooperation with humans and other species: working with different species
- alloparenting: looking after youngsters that aren't their own
- social play

Dr Susanne Shultz, an evolutionary biologist in Manchester's School of Earth and Environmental Sciences, said: "As humans, our ability to socially interact and cultivate relationships has allowed us to colonize almost every ecosystem and environment on the planet. We know whales and dolphins also have exceptionally large and anatomically sophisticated brains and, therefore, have created a similar marine-based culture. That means the apparent co-evolution of brains, social structure, and behavioral richness of marine mammals provides a unique and striking parallel to the large brains and hyper-sociality of humans and other primates on land. Unfortunately, they won't ever mimic our great metropolises and technologies because they didn't evolve opposable thumbs." The team used the dataset to test the social brain hypothesis (SBH) and cultural brain hypothesis (CBH). The SBH and CBH are evolutionary theories originally developed to explain large brains in primates and land mammals. They argue that large brains are an evolutionary response to complex and information-rich social environments. However, this is the first time these hypotheses have been applied to 'intelligent' marine mammals on such a large scale. Dr Michael Muthukrishna, Assistant Professor of Economic Psychology at LSE, added: "This research isn't just about looking at the intelligence of whales and dolphins, it also has important anthropological ramifications as well. In order to move toward a more general theory of human behavior, we need to understand what makes humans so different from other animals. And to do this, we need a control group. Compared to primates, cetaceans are a more 'alien' control group."

For most people, it is a stretch of the imagination to understand the world in four dimensions but a new study has discovered structures in the brain with up to eleven dimensions - ground-breaking work that is beginning to reveal the brain's deepest architectural secrets. Using algebraic topology in a way that it has never been used before in neuroscience, a team from the Blue Brain Project has uncovered a universe of multi-dimensional geometrical structures and spaces within the networks of the brain. The research, published today in Frontiers in Computational Neuroscience, shows that these structures arise when a group of neurons forms a clique: each neuron connects to every other neuron in the group in a very specific way that generates a precise geometric object. The more neurons there are in a clique, the higher the dimension of the geometric object. "We found a world that we had never imagined," says neuroscientist Henry Markram, director of Blue Brain Project and professor at the EPFL in Lausanne, Switzerland, "there are tens of millions of these objects even in a small speck of the brain, up through seven dimensions. In some networks, we even found structures with up to eleven dimensions." Markram suggests this may explain why it has been so hard to understand the brain. "The mathematics usually applied to study networks cannot detect the high-dimensional structures and spaces that we now see clearly." If 4D worlds stretch our imagination, worlds with 5, 6 or more dimensions are too complex for most of us to comprehend. This is where algebraic topology comes in: a branch of mathematics that can describe systems with any number of dimensions. The mathematicians who brought algebraic topology to the study of brain networks in the Blue Brain Project were Kathryn Hess from EPFL and Ran Levi from Aberdeen University. "Algebraic topology is like a telescope and microscope at the same time. 
It can zoom into networks to find hidden structures - the trees in the forest - and see the empty spaces - the clearings - all at the same time," explains Hess. In 2015, Blue Brain published the first digital copy of a piece of the neocortex - the most evolved part of the brain and the seat of our sensations, actions, and consciousness. In this latest research, using algebraic topology, multiple tests were performed on the virtual brain tissue to show that the multi-dimensional brain structures discovered could never be produced by chance. Experiments were then performed on real brain tissue in the Blue Brain's wet lab in Lausanne confirming that the earlier discoveries in the virtual tissue are biologically relevant and also suggesting that the brain constantly rewires during development to build a network with as many high-dimensional structures as possible.
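The link between clique size and dimension described above can be illustrated with a small sketch. It assumes that the "very specific way" neurons connect means a directed clique: the neurons can be ordered so that every earlier neuron connects to every later one. That reading, the toy network, and the function names are illustrative assumptions, not the Blue Brain team's code or data. Under this reading, a clique of n neurons corresponds to a geometric object of dimension n - 1.

```python
from itertools import combinations, permutations


def is_directed_clique(nodes, edges):
    """Return True if some ordering of `nodes` has an edge from every
    earlier node to every later node (assumed definition of a clique)."""
    for order in permutations(nodes):
        if all(
            (order[i], order[j]) in edges
            for i in range(len(order))
            for j in range(i + 1, len(order))
        ):
            return True
    return False


def count_cliques_by_dimension(num_nodes, edges):
    """Map dimension -> number of node groups forming a directed clique.
    A group of n nodes is counted at dimension n - 1."""
    counts = {}
    for size in range(1, num_nodes + 1):
        found = sum(
            1
            for group in combinations(range(num_nodes), size)
            if is_directed_clique(group, edges)
        )
        if found == 0:
            break  # every subset of a clique is a clique, so none larger exist
        counts[size - 1] = found
    return counts


# Hypothetical toy network: neurons 0-3 are fully connected from lower to
# higher index, and neuron 4 receives a single connection from neuron 3.
edges = {(0, 1), (0, 2), (0, 3), (1, 2), (1, 3), (2, 3), (3, 4)}
counts = count_cliques_by_dimension(5, edges)
print(counts)  # {0: 5, 1: 7, 2: 4, 3: 1}
```

The brute-force search is only practical for tiny networks; it counts each group of neurons once, which is a simplification chosen here for readability.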

Via Mariaschnee

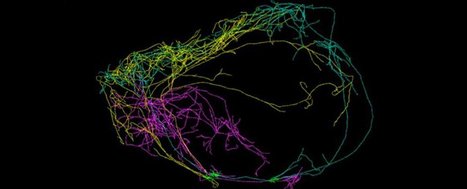

For the first time, scientists have detected a giant neuron wrapped around the entire circumference of a mouse's brain, and it's so densely connected across both hemispheres, it could finally explain the origins of consciousness. Using a new imaging technique, the team detected the giant neuron emanating from one of the best-connected regions in the brain, and say it could be coordinating signals from different areas to create conscious thought. This recently discovered neuron is one of three that have been detected for the first time in a mammal's brain, and the new imaging technique could help us figure out if similar structures have gone undetected in our own brains for centuries. At a recent meeting of the Brain Research through Advancing Innovative Neurotechnologies initiative in Maryland, a team from the Allen Institute for Brain Science described how all three neurons stretch across both hemispheres of the brain, but the largest one wraps around the organ's circumference like a "crown of thorns". You can see them highlighted in the image at the top of the page. Lead researcher Christof Koch told Sara Reardon at Nature that they've never seen neurons extend so far across both regions of the brain before. Oddly enough, all three giant neurons happen to emanate from a part of the brain that's shown intriguing connections to human consciousness in the past - the claustrum, a thin sheet of grey matter that could be the most connected structure in the entire brain, based on volume.

Via Wildcat2030, Miloš Bajčetić, Tania Gammage

The way our brains learn new information has puzzled scientists for decades - we come across so much new information daily, how do our brains store what's important, and forget the rest more efficiently than any computer we've built? It turns out that this could be controlled by the same laws that govern the formation of the stars and the evolution of the Universe, because a team of physicists has shown that, at the neuronal level, the learning process could ultimately be limited by the laws of thermodynamics. "The greatest significance of our work is that we bring the second law of thermodynamics to the analysis of neural networks," lead researcher Sebastian Goldt from the University of Stuttgart in Germany told Lisa Zyga from Phys.org. The second law of thermodynamics is one of the most famous physics laws we have, and it states that the total entropy of an isolated system always increases over time. Entropy is a thermodynamic quantity that's often referred to as a measure of disorder in a system. What that means is that, without extra energy being put into a system, transformations can't be reversed - things are going to get progressively more disordered, because it's more efficient that way. Entropy is currently the leading hypothesis for why the arrow of time only ever marches forwards. The second law of thermodynamics says that you can't un-crack an egg, because it would lower the Universe's entropy, and for that reason, there will always be a future and a past. But what does this have to do with the way our brains learn? Just like the bonding of atoms and the arrangement of gas particles in stars, our brains find the most efficient way to organise themselves. "The second law is a very powerful statement about which transformations are possible - and learning is just a transformation of a neural network at the expense of energy," Goldt explained to Zyga.
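As a compact reference for the law Goldt invokes, the second law is usually written as an inequality on the total entropy of an isolated system. Strictly, the entropy never decreases, and it stays constant only in the idealized reversible case. The symbol $S$ and the time labels $t_1$ and $t_2$ are notation chosen here, not taken from the study.

$$
\Delta S = S(t_2) - S(t_1) \ge 0, \qquad t_2 > t_1
$$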

Via Kathy Bosiak


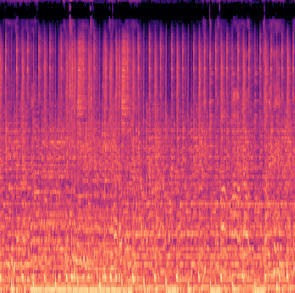

The process of reconstructing experiences from human brain activity offers a unique lens into how the brain interprets and represents the world. Recently, the Google team and international collaborators introduced a method for reconstructing music from brain activity alone, captured using functional magnetic resonance imaging (fMRI). This approach uses either music retrieval or the MusicLM music generation model conditioned on embeddings derived from fMRI data. The generated music resembles the musical stimuli that human subjects experienced, with respect to semantic properties like genre, instrumentation, and mood. The scientists investigate the relationship between different components of MusicLM and brain activity through a voxel-wise encoding modeling analysis. Furthermore, they analyze which brain regions represent information derived from purely textual descriptions of music stimuli.

Psycholinguist Giosuè Baggio sheds light on the thrilling, evolving field of neurolinguistics, where neuroscience and linguistics meet. What exactly is language? At first thought, it’s a continuous flow of sounds we hear, sounds we make, scribbles on paper or on a screen, movements of our hands, and expressions on our faces. But if we pause for a moment, we find that behind this rich experiential display is something different: the smaller and larger building blocks of a Lego-like game of construction, with parts of words, words, phrases, sentences, and larger structures still. We can choose the pieces and put them together with some freedom, but not anything goes. There are rules, constraints. And no half measures. Either a sound is used in a word, or it’s not; either a word is used in a sentence, or it’s not. But unlike Lego, language is abstract: Eventually, one runs out of Lego bricks, whereas there could be no shortage of the sound b, and no cap on reusing the word “beautiful” in as many utterances as there are beautiful things to talk about.

Language is a calculus

It’s tempting to see languages as mathematical systems of some kind. Indeed, languages are calculi, in a very real sense, as real as the senses in which they are changing historical objects, means of communication, inner voices, vehicles of identity, instruments of persuasion, and mediums of great art. But while all these aspects of language strike us almost immediately, as they have philosophers for centuries, the connection between language and computation is not immediately apparent, nor do all scholars agree that it is even right to make it. It took all the ingenuity of linguists, like Noam Chomsky, and logicians, like Richard Montague, starting in the 1950s, to build mathematical systems that could capture language. Chomsky-style calculi tell us what words can go where in a sentence’s structure (syntax); Montague-style calculi tell us how language expresses relations between sets (semantics).
They also remind us that no language could function without operations that put together words and ideas in the right ways: The sentence “I want that beautiful tree in our garden” is not a random configuration of words; its meaning is not completely open to interpretation — it is the tree, not the garden, that is beautiful; it is the garden, not the tree, that is ours. Language in the brain At this point, most linguists would probably be content with saying that calculi are handy constructs, tools we need in order to make rational sense of the jumble that is language. But if pressed, they would admit that the brain has to be doing some of that stuff, too. When you hear, read, or see “I want that beautiful tree in our garden,” something inside your head has to put together those words in the right way — not, say, in the way that yields the message that I want that tree in our beautiful garden. The language-as-calculus idea may well be the best model of language in the brain we currently have — or perhaps the worst, except for all the others. Linguists, logicians, and philosophers, for at least the first half of the 20th century, resisted the idea that language is in the brain. If it is anywhere at all, they estimated, it is out there, in the community of speakers. For neurologists such as Paul Broca and Carl Wernicke, active in the second half of the 19th century, the answer was different. They had shown that lesions to certain parts of the cerebral cortex could lead to specific disorders of spoken language, known as “aphasias.” It took an entire century — from around 1860 to 1960 — for the ideas that language is in the brain and that language is a calculus to meet, for neurology and linguistics to blend into neurolinguistics. If we look at what the brain does while people perform a language task, we find some of the signatures of a computational system at work. 
If we record electric or magnetic fields produced by the brain, for example, we find signals that are only sensitive to the identity of the sound one is hearing — say, that it is a b, instead of a d — and not to the pitch, volume, or any other concrete and contingent features of the speech sound. At some level, the brain treats each sound as an abstract variable in a calculus: a b like any other, not this particular b. The brain also reacts differently to grammar errors, as in “I want that beautiful trees in our garden,” and incongruities of meaning, as in “I want that beautiful democracy in our garden”: Rules and constraints matter. We are slowly figuring out how the brain operates with the abstract system that is language, how it arranges morphemes — the smallest grammatical units of meaning — into words, words into phrases, and so on, on the fly. We know that it often looks ahead in time, trying to anticipate what new information might arrive, and that words and ideas are combined by a few different operations, not just one, kicking in at slightly different times and originating in different parts of the brain.

Machine learning and neuroscience discover the mathematical system used by the brain to organize visual objects. When Plato set out to define what made a human a human, he settled on two primary characteristics: We do not have feathers, and we are bipedal (walking upright on two legs). Plato's characterization may not encompass all of what identifies a human, but his reduction of an object to its fundamental characteristics provides an example of a technique known as principal component analysis. Now, Caltech researchers have combined tools from machine learning and neuroscience to discover that the brain uses a mathematical system to organize visual objects according to their principal components. The work shows that the brain contains a two-dimensional map of cells representing different objects. The location of each cell in this map is determined by the principal components (or features) of its preferred objects; for example, cells that respond to round, curvy objects like faces and apples are grouped together, while cells that respond to spiky objects like helicopters or chairs form another group. The research was conducted in the laboratory of Doris Tsao (BS '96), professor of biology, director of the Tianqiao and Chrissy Chen Center for Systems Neuroscience and holder of its leadership chair, and Howard Hughes Medical Institute Investigator. A paper describing the study appears in the journal Nature on June 3, 2020. The researchers took the set of thousands of images they had shown primates and passed them through a deep network. They then examined activations of units found in the eight different layers of the deep network. Because there are thousands of units in each layer, it was difficult to discern any patterns to their firing. The lead researcher, Bao, decided to use principal component analysis to determine the fundamental parameters driving activity changes in each layer of the network. 
In one of the layers, Bao noticed something oddly familiar: one of the principal components was strongly activated by spiky objects, such as spiders and helicopters, and was suppressed by faces. This precisely matched the object preferences of the cells Bao had recorded from earlier in the no man's land network. What could account for this coincidence? One idea was that IT cortex might actually be organized as a map of object space, with x- and y-dimensions determined by the top two principal components computed from the deep network. This idea would predict the existence of face, body, and no man's land regions, since their preferred objects each fall neatly into different quadrants of the object space computed from the deep network. But one quadrant had no known counterpart in the brain: stubby objects, like radios or cups. Bao decided to show primates images of objects belonging to this "missing" quadrant as he monitored the activity of their IT cortexes. Astonishingly, he found a network of cortical regions that did respond only to stubby objects, as predicted by the model. This means the deep network had successfully predicted the existence of a previously unknown set of brain regions. Why was each quadrant represented by a network of multiple regions? Earlier, Tsao's lab had found that different face patches throughout IT cortex encode an increasingly abstract representation of faces. Bao found that the two networks he had discovered showed this same property: cells in more anterior regions of the brain responded to objects across different angles, while cells in more posterior regions responded to objects only at specific angles. This shows that the temporal lobe contains multiple copies of the map of object space, each more abstract than the preceding. Finally, the team was curious how complete the map was. 
They measured the brain activity from each of the four networks comprising the map as the primates viewed images of objects and then decoded the brain signals to determine what the primates had been looking at. The model was able to accurately reconstruct the images viewed by the primates. "We now know which features are important for object recognition," says Bao. "The similarity between the important features observed in both biological visual systems and deep networks suggests the two systems might share a similar computational mechanism for object recognition. Indeed, this is the first time, to my knowledge, that a deep network has made a prediction about a feature of the brain that was not known before and turned out to be true. I think we are very close to figuring out how the primate brain solves the object recognition problem." The research paper is titled "A map of object space in primate inferotemporal cortex."
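
As a rough illustration of the analysis described above, the sketch below runs principal component analysis on a matrix of unit activations and places each image in a two-dimensional "object space" by its top two components. It is a minimal sketch, not the researchers' code: the activations are randomly generated stand-ins, and the function names `object_space_coordinates` and `quadrant` are hypothetical.

```python
import numpy as np

def object_space_coordinates(activations, n_components=2):
    """Project activations (images x units) onto their top principal components."""
    centered = activations - activations.mean(axis=0, keepdims=True)
    # SVD of the centered matrix: rows of vt are principal axes, ordered by variance.
    _, singular_values, vt = np.linalg.svd(centered, full_matrices=False)
    components = vt[:n_components]
    coords = centered @ components.T
    explained = singular_values ** 2 / np.sum(singular_values ** 2)
    return coords, components, explained[:n_components]

def quadrant(coords):
    """Label each image 1-4 by the signs of its first two coordinates."""
    x, y = coords[:, 0], coords[:, 1]
    return np.where(x >= 0, np.where(y >= 0, 1, 4), np.where(y >= 0, 2, 3))

rng = np.random.default_rng(0)
# Assumed stand-in data: 200 images, 50 units, driven by two hidden features plus noise.
latent = rng.normal(size=(200, 2)) * np.array([5.0, 3.0])
mixing = rng.normal(size=(2, 50))
activations = latent @ mixing + 0.1 * rng.normal(size=(200, 50))

coords, components, explained = object_space_coordinates(activations)
labels = quadrant(coords)
print("fraction of variance captured by two components:", explained.sum())
```

In this toy setting, the quadrant labels play the role of the four groupings mentioned in the article; which real object categories would land in which quadrant depends entirely on the actual network and images.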

Psychology researchers at UC Santa Cruz have found that playing games in virtual reality creates an effect called "time compression," where time goes by faster than you think. Grayson Mullen, who was a cognitive science undergraduate at the time, worked with Psychology Professor Nicolas Davidenko to design an experiment that tested how virtual reality's effects on a game player's sense of time differ from those of conventional monitors. The results are now published in the journal Timing & Time Perception.

If songbirds could appear on "The Masked Singer" reality TV competition, zebra finches would likely steal the show. That's because they can rapidly memorize the signature sounds of at least 50 different members of their flock, according to new research from the University of California, Berkeley. In recent findings published in the journal Science Advances, these boisterous, red-beaked songbirds, known as zebra finches, have been shown to pick one another out of a crowd (or flock) based on a particular peer's distinct song or contact call. Like humans who can instantly tell which friend or relative is calling by the timbre of the person's voice, zebra finches have a near-human capacity for language mapping. Moreover, they can remember each other's unique vocalizations for months and perhaps longer, the findings suggest. "The amazing auditory memory of zebra finches shows that birds' brains are highly adapted for sophisticated social communication," said study lead author Frederic Theunissen, a UC Berkeley professor of psychology, integrative biology and neuroscience. Theunissen and fellow researchers sought to gauge the scope and magnitude of zebra finches' ability to identify their feathered peers based purely on their unique sounds. As a result, they found that the birds, which mate for life, performed even better than anticipated. "For animals, the ability to recognize the source and meaning of a cohort member's call requires complex mapping skills, and this is something zebra finches have clearly mastered," Theunissen said. A pioneer in the study of bird and human auditory communication for at least two decades, Theunissen acquired a fascination and admiration for the communication skills of zebra finches through his collaboration with UC Berkeley postdoctoral fellow Julie Elie, a neuroethologist who has studied zebra finches in the forests of their native Australia. Their teamwork yielded groundbreaking findings about the communication skills of zebra finches. 
Zebra finches usually travel around in colonies of 50 to 100 birds, flying apart and then coming back together. Their songs are typically mating calls, while their distance or contact calls are used to identify where they are, or to locate one another. "They have what we call a 'fusion fission' society, where they split up and then come back together," Theunissen said. "They don't want to separate from the flock, and so, if one of them gets lost, they might call out 'Hey, Ted, we're right here.' Or, if one of them is sitting in a nest while the other is foraging, one might call out to ask if it's safe to return to the nest."

Humans use a variety of cues to infer an object's weight, including how easily objects can be moved. For example, if we observe an object being blown down the street by the wind, we can infer that it is light. A team of scientists has now tested whether New Caledonian crows make this type of inference. After being trained that only one type of object (either light or heavy) was rewarded when dropped into a food dispenser, birds observed pairs of novel objects (one light and one heavy) suspended from strings in front of an electric fan. The fan was either on—creating a breeze which buffeted the light, but not the heavy, object—or off, leaving both objects stationary. In subsequent test trials, birds could drop one, or both, of the novel objects into the food dispenser. Despite having no opportunity to handle these objects prior to testing, birds touched the correct object (light or heavy) first in 73% of experimental trials, and were at chance in control trials. These results suggest that birds used pre-existing knowledge about the behavior exhibited by differently weighted objects in the wind to infer their weight, using this information to guide their choices.

Nissan unveiled research today that will enable vehicles to interpret signals from the driver’s brain, redefining how people interact with their cars. The company’s Brain-to-Vehicle, or B2V, technology promises to speed up reaction times for drivers and will lead to cars that keep adapting to make driving more enjoyable. Nissan will demonstrate capabilities of this exclusive technology at the CES 2018 trade show in Las Vegas. B2V is the latest development in Nissan Intelligent Mobility, the company’s vision for transforming how cars are driven, powered and integrated into society. “When most people think about autonomous driving, they have a very impersonal vision of the future, where humans relinquish control to the machines. Yet B2V technology does the opposite, by using signals from their own brain to make the drive even more exciting and enjoyable,” said Nissan Executive Vice President Daniele Schillaci. “Through Nissan Intelligent Mobility, we are moving people to a better world by delivering more autonomy, more electrification and more connectivity.” This breakthrough from Nissan is the result of research into using brain decoding technology to predict a driver’s actions and detect discomfort:

- Predict: By catching signs that the driver’s brain is about to initiate a movement – such as turning the steering wheel or pushing the accelerator pedal – driver assist technologies can begin the action more quickly. This can improve reaction times and enhance manual driving.

- Detect: By detecting and evaluating driver discomfort, artificial intelligence can change the driving configuration or driving style when in autonomous mode.

Other possible uses include adjusting the vehicle’s internal environment, said Dr. Lucian Gheorghe, senior innovation researcher at the Nissan Research Center in Japan, who’s leading the B2V research. For example, the technology can use augmented reality to adjust what the driver sees and create a more relaxing environment. “The potential applications of the technology are incredible,” Gheorghe said. “This research will be a catalyst for more Nissan innovation inside our vehicles in the years to come.”

Via Daniel Perez-Marcos

The brains of close friends react to the world in similar ways, so does this mean we form natural echo chambers? You may have a lot of things in common with your friends and brain activity could be one of them. According to a new study that investigated the neural responses of those in real-world social networks, you are more likely to perceive the world in the same way your friends do and this can be seen in patterns of neural activity. The investigation by scientists at Dartmouth College, published today in Nature Communications, examined the brains of 42 first-year graduate students, monitoring their responses to a collection of video clips. What they found was that close friends within this group had the most similar neural activity patterns, followed by friends-of-friends, then friends-of-friends-of-friends. The less closely subjects were connected as friends, the more different their neural responses tended to be. "Neural responses to dynamic, naturalistic stimuli, like videos, can give us a window into people's unconstrained, spontaneous thought processes as they unfold,” says lead author Carolyn Parkinson, who at the time of the study was a postdoctoral fellow in psychological and brain sciences at Dartmouth. “Our results suggest that friends process the world around them in exceptionally similar ways." The 42 students were part of a 279-person cohort that filled out a survey to glean who they considered friends in the year group. The researchers gauged closeness based on mutually expressed friendships, and used this to estimate social distances between individuals. The 42 selected subjects were each shown a range of videos, covering everything from politics to comedy, to elicit a variety of responses. The fact that friends tended to respond similarly at the neural level suggests these people perceive the world in similar ways. 
“Whether we naturally gravitate towards people who perceive, think about and respond to things like we do or whether we become more similar over time, through shared experiences, we don't know,” notes Thalia Wheatley, co-author of the study and an associate professor of psychological and brain sciences at Dartmouth.
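
The notion of social distance used here (friends, friends-of-friends, and so on) can be illustrated with a short sketch that keeps only mutually reported friendships and counts the hops between people with a breadth-first search. This is an assumption about how such distances could be computed, not the study's actual procedure; the survey data and the names `mutual_ties` and `social_distance` are hypothetical.

```python
from collections import deque

def mutual_ties(nominations):
    """Keep only friendships that both people reported."""
    graph = {person: set() for person in nominations}
    for person, named in nominations.items():
        for other in named:
            if person in nominations.get(other, ()):
                graph[person].add(other)
    return graph

def social_distance(graph, start):
    """Breadth-first search: friendship hops from start to everyone reachable."""
    distance = {start: 0}
    queue = deque([start])
    while queue:
        current = queue.popleft()
        for neighbor in graph[current]:
            if neighbor not in distance:
                distance[neighbor] = distance[current] + 1
                queue.append(neighbor)
    return distance

# Hypothetical survey answers: who each student named as a friend.
nominations = {
    "A": {"B", "D"},
    "B": {"A", "C"},
    "C": {"B", "D"},
    "D": {"C"},
}
graph = mutual_ties(nominations)
distances = social_distance(graph, "A")
print(distances)
```

In this example, A names D but D does not name A, so that tie is dropped and D ends up three hops from A (a friend-of-a-friend-of-a-friend).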

A new optical illusion has been discovered, and it’s really quite striking. The strange effect is called the ‘curvature blindness’ illusion, and it’s described in a new paper from psychologist Kohske Takahashi of Chukyo University, Japan. Here’s an example of the illusion: A series of wavy horizontal lines are shown. All of the lines have exactly the same curvature.

Imagine replacing a damaged eye with a window directly into the brain — one that communicates with the visual part of the cerebral cortex by reading from a million individual neurons and simultaneously stimulating 1,000 of them with single-cell accuracy, allowing someone to see again.

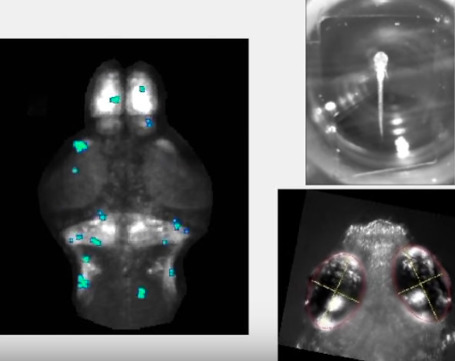

That’s the goal of a $21.6 million DARPA award to the University of California, Berkeley (UC Berkeley), one of six organizations funded by DARPA’s Neural Engineering System Design program announced this week to develop implantable, biocompatible neural interfaces that can compensate for visual or hearing deficits.

The UCB researchers ultimately hope to build a device for use in humans. But the researchers’ goal during the four-year funding period is more modest: to create a prototype to read and write to the brains of model organisms — allowing for neural activity and behavior to be monitored and controlled simultaneously. These organisms include zebrafish larvae, which are transparent, and mice, via a transparent window in the skull. “The ability to talk to the brain has the incredible potential to help compensate for neurological damage caused by degenerative diseases or injury,” said project leader Ehud Isacoff, a UC Berkeley professor of molecular and cell biology and director of the Helen Wills Neuroscience Institute. “By encoding perceptions into the human cortex, you could allow the blind to see or the paralyzed to feel touch.” To communicate with the brain, the team will first insert a gene into neurons that makes fluorescent proteins, which flash when a cell fires an action potential. This will be accompanied by a second gene that makes a light-activated “optogenetic” protein, which stimulates neurons in response to a pulse of light. To read, the team is developing a miniaturized “light field microscope.” Mounted on a small window in the skull, it peers through the surface of the brain to visualize up to a million neurons at a time at different depths and monitor their activity. This microscope is based on the revolutionary “light field camera,” which captures light through an array of lenses and reconstructs images computationally in any focus. The combined read-write function will eventually be used to directly encode perceptions into the human cortex — inputting a visual scene to enable a blind person to see. The goal is to eventually enable physicians to monitor and activate thousands to millions of individual human neurons using light. 
Isacoff, who specializes in using optogenetics to study the brain’s architecture, can already successfully read from thousands of neurons in the brain of a larval zebrafish, using a large microscope that peers through the transparent skin of an immobilized fish, and simultaneously write to a similar number. The team will also develop computational methods that identify the brain activity patterns associated with different sensory experiences, hoping to learn the rules well enough to generate “synthetic percepts” — meaning visual images representing things being touched — by a person with a missing hand, for example. This technology has a lot of potential in the future.

A monkey’s brain builds a picture of a human face somewhat like a Mr. Potato Head — piecing it together bit by bit. The code that a monkey’s brain uses to represent faces relies not on groups of nerve cells tuned to specific faces — as has been previously proposed — but on a population of about 200 cells that code for different sets of facial characteristics. Added together, the information contributed by each nerve cell lets the brain efficiently capture any face, researchers report June 1 in Cell. “It’s a turning point in neuroscience — a major breakthrough,” says Rodrigo Quian Quiroga, a neuroscientist at the University of Leicester in England who wasn’t part of the work. “It’s a very simple mechanism to explain something as complex as recognizing faces.” Until now, Quiroga says, the leading explanation for the way the primate brain recognizes faces proposed that individual nerve cells, or neurons, respond to certain types of faces (SN: 6/25/05, p. 406). A system like that might work for the few dozen people with whom you regularly interact. But accounting for all of the peripheral people encountered in a lifetime would require a lot of neurons. It now seems that the brain might have a more efficient strategy, says Doris Tsao, a neuroscientist at Caltech. Tsao and coauthor Le Chang used statistical analyses to identify 50 variables that accounted for the greatest differences between 200 face photos. Those variables represented somewhat complex changes in the face — for instance, the hairline rising while the face becomes wider and the eyes become further-set. The researchers turned those variables into a 50-dimensional “face space,” with each face being a point and each dimension being an axis along which a set of features varied. Then, Tsao and Chang extracted 2,000 faces from that map, each linked to specific coordinates. 
While projecting the faces one at a time onto a screen in front of two macaque monkeys, the team recorded the activity in single neurons in parts of the monkeys’ temporal lobes known to respond specifically to faces. All together, the recordings captured activity from 205 neurons.

Over the years, scientists have come up with a lot of ideas about why we sleep. Some have argued that it’s a way to save energy. Others have suggested that slumber provides an opportunity to clear away the brain’s cellular waste. Still others have proposed that sleep simply forces animals to lie still, letting them hide from predators. A pair of papers published on Thursday in the journal Science offer evidence for another notion: We sleep to forget some of the things we learn each day. In order to learn, we have to grow connections, or synapses, between the neurons in our brains. These connections enable neurons to send signals to one another quickly and efficiently. We store new memories in these networks. In 2003, Giulio Tononi and Chiara Cirelli, biologists at the University of Wisconsin-Madison, proposed that synapses grew so exuberantly during the day that our brain circuits got “noisy.” When we sleep, the scientists argued, our brains pare back the connections to lift the signal over the noise.
